I want to tell you about a peculiar ritual that takes place in product marketing departments every quarter. A PMM disappears into a conference room with a competitor's website, three tabs of G2 reviews, a half-remembered anecdote from a sales call, and a vague sense of dread. Four weeks later, they emerge with a battlecard. The sales team glances at it, files it somewhere between "useful" and "I'll read it later," and continues winging competitive conversations based on instinct.

This is how most B2B companies do sales enablement. It's slow, it's expensive, and the output is based on what the PMM thinks buyers care about rather than what buyers actually care about. The battlecard goes stale within weeks. The objection handling guide addresses objections the sales team stopped hearing months ago. The demo script showcases features in an order that makes sense to the product team but baffles the prospect.

There is, I'm pleased to report, a substantially better way to do this.

The Seven Things Your Sales Team Actually Needs

Before we get to the how, let's be honest about the what. Sales enablement has become a catch-all term for "things PMMs produce that sales teams are supposed to use." In practice, most of it doesn't get used. The reason is simple: the content doesn't reflect how buyers actually think and talk.

Strip it back to essentials, and your sales team needs exactly seven things:

A competitive battlecard – not a 30-page dossier, but a single page they can scan in 60 seconds during a live call. Why you win, where the competitor is strong, questions that expose gaps, and one-line rebuttals for the competitor's favourite claims.

An objection handling guide – every concern a buyer might raise, paired with an evidence-backed response. Not "here's what we wish they'd believe" but "here's what actually addresses their worry."

A customer evidence quote bank – organised by theme, with demographic context. "A 38-year-old marketing director in Chicago told us..." is infinitely more persuasive than "customers love our product."

A one-pager – your product described in the language customers use, not the language your product team uses. If it says "leverage synergies" anywhere, start again.

A pitch narrative – a story that follows the buyer's journey from problem to solution. Not a feature tour. A narrative.

An ROI framework – the cost of doing nothing versus the cost of your product, with a payback timeline that doesn't require a leap of faith.

A demo script – features presented in the order buyers said they'd want to see them, not the order your engineering team built them.

Traditionally, producing this kit takes three to six weeks. By the time it's finished, the competitive landscape has shifted, the sales team has developed their own workarounds, and the PMM is already behind on next quarter's deliverables.

Why Most Sales Enablement Fails

The fundamental problem is the input, not the output. Most sales enablement is built on three sources of dubious reliability: the competitor's own marketing (which is, by definition, optimistic), a handful of anecdotal win/loss conversations (small sample, fading memories), and the PMM's professional judgment (informed but unvalidated).

What's missing is the buyer's voice at scale. Not what five customers said in post-deal interviews conducted three months after the fact. What a representative sample of your target market thinks, feels, and would actually do when presented with your product versus the alternatives.

This is why battlecards based on competitor website analysis feel hollow. They tell your sales team what the competitor claims, not whether buyers believe it. There's a rather important difference.

The best sales enablement content passes what I'd call the ABC test:

Accuracy – one factual error and the entire card gets binned. Sales reps who've been burned by inaccurate collateral don't come back for seconds.

Brevity – if it can't be scanned in 60 seconds during a live call, it won't be used. A 10-page competitive analysis is less useful than a one-page battlecard precisely because nobody reads it when it matters.

Consistency – standardised format across all competitor cards. Reps should know exactly where to find each section without thinking about it.

The companies that report winning 20% more competitive deals with well-maintained battlecards aren't producing more content. They're producing content grounded in what buyers actually think.

The Sales Enablement Factory

Here's the bit that genuinely surprised me when we first tested it.

Ditto is a synthetic research platform with over 300,000 AI-powered personas. Each has a demographic profile, professional background, media diet, and coherent set of opinions. You ask them structured questions. They respond with the nuance and specificity of real research participants – but in minutes rather than weeks, at a fraction of the cost, and without the logistical overhead of recruitment.

Claude Code is an AI agent that orchestrates multi-step workflows autonomously. It can design research studies, execute them via Ditto's API, extract structured insights from the responses, and generate formatted deliverables – all in a single automated sequence.

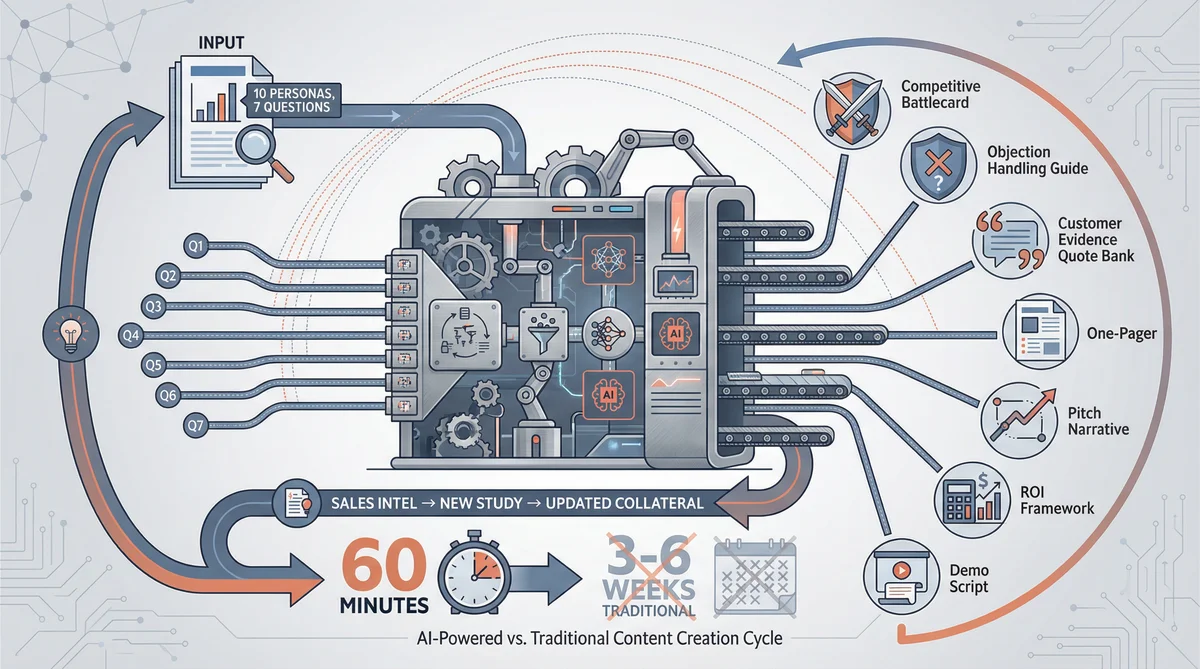

When you combine them, something rather striking happens: a single 10-persona, 7-question Ditto study produces all the raw material needed for all seven sales enablement deliverables. Claude Code then transforms that raw material into finished assets.

The timeline looks like this:

Design the study: 10 minutes

Run the study via Ditto: 20 minutes

Generate the battlecard: 5 minutes

Generate the objection guide: 5 minutes

Generate the quote bank: 3 minutes

Generate the one-pager: 5 minutes

Generate the pitch narrative: 10 minutes

Generate the ROI framework: 5 minutes

Generate the demo script: 5 minutes

Total: 68 minutes by this breakdown, so a little over an hour. The traditional equivalent takes three to six weeks.
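For anyone who wants to check the arithmetic, here is a minimal sketch that adds up the step estimates listed above. The durations are copied from the timeline; the dictionary and function names are mine, purely for illustration.

```python
# Step durations in minutes, copied from the timeline above.
TIMELINE_MINUTES = {
    "Design the study": 10,
    "Run the study via Ditto": 20,
    "Generate the battlecard": 5,
    "Generate the objection guide": 5,
    "Generate the quote bank": 3,
    "Generate the one-pager": 5,
    "Generate the pitch narrative": 10,
    "Generate the ROI framework": 5,
    "Generate the demo script": 5,
}


def total_minutes(timeline: dict) -> int:
    """Sum every step in the timeline."""
    return sum(timeline.values())


def generation_minutes(timeline: dict) -> int:
    """Sum only the deliverable-generation steps."""
    return sum(m for step, m in timeline.items() if step.startswith("Generate"))


if __name__ == "__main__":
    print(f"Total: {total_minutes(TIMELINE_MINUTES)} minutes")
    print(f"Of which generation: {generation_minutes(TIMELINE_MINUTES)} minutes")
```

The steps come to 68 minutes, of which 38 are spent generating deliverables, so "about an hour" is a fair summary.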

I should emphasise that these aren't thin, generated-from-templates deliverables. Every claim in the battlecard is backed by specific persona responses. Every objection in the handling guide was raised by a persona and addressed with evidence from the study. Every quote in the evidence bank includes demographic context. The study is the foundation. Claude Code is the builder.

The Seven Questions That Power Everything

The study design is where the magic happens. These seven questions are specifically engineered to produce raw material for all seven deliverables simultaneously. Each question does double or triple duty:

Q1: "You're evaluating solutions in [category] for your team. What's your biggest pain point right now? Walk me through how you currently solve it."

This feeds the pitch narrative (the "before" state), the one-pager (problem statement), and the demo script (what scenario to demonstrate).

Q2: "Compared to [Competitor A] and [Competitor B], what would make you choose a new option? What's the minimum bar?"

This is the core battlecard question. Decision drivers that favour you become "Why We Win." Decision drivers that favour the competitor become "Competitor Strengths" with response strategies.

Q3: "If someone presented [your product] to you, what would your first reaction be? What would excite you most? What would make you sceptical?"

Excitement responses power the one-pager. Scepticism responses feed directly into the objection handling guide. The split between excitement and scepticism tells you how much work your sales team has to do.

Q4: "What's the ONE thing you'd want this solution to do brilliantly? Why does that matter more than everything else?"

This determines the hero feature for your demo script. Not what your product team thinks is impressive – what buyers would queue up to see first.

Q5: "What would make you switch from your current solution? What's blocking you right now?"

Switching triggers become battlecard intelligence ("attack when this happens"). Blockers become objection handling content and inform the ROI framework (the cost of inaction).

Q6: "What would you expect to pay for this? What would feel like a steal versus too expensive?"

This powers the ROI framework directly. When personas tell you what they'd expect to pay and what the cost of not solving the problem is, you have both sides of the value equation.

Q7: "What would you need to see to feel confident recommending this to your boss or team? What proof matters most?"

The proof points buyers demand become the evidence your sales team leads with. If seven out of ten personas say they need to see a case study from their industry, that's not a suggestion – it's a mandate.
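The "double or triple duty" claim is easier to see as a lookup table. The sketch below records only the question-to-deliverable links stated in the descriptions above, then inverts them so you can ask which questions feed a given deliverable. Q7 is left empty because the text doesn't tie it to a named deliverable, and the quote bank doesn't appear because no single question is named as its source. The variable and function names are illustrative, not part of Ditto or Claude Code.

```python
from collections import defaultdict

# Links as stated in the question descriptions above.
# Q7 is left empty: the text does not name a deliverable for it.
QUESTION_FEEDS = {
    "Q1": ["pitch narrative", "one-pager", "demo script"],
    "Q2": ["battlecard"],
    "Q3": ["one-pager", "objection guide"],
    "Q4": ["demo script"],
    "Q5": ["battlecard", "objection guide", "ROI framework"],
    "Q6": ["ROI framework"],
    "Q7": [],
}


def questions_by_deliverable(feeds: dict) -> dict:
    """Invert the question -> deliverables map into deliverable -> questions."""
    index = defaultdict(list)
    for question, deliverables in feeds.items():
        for deliverable in deliverables:
            index[deliverable].append(question)
    return dict(index)


if __name__ == "__main__":
    for deliverable, questions in questions_by_deliverable(QUESTION_FEEDS).items():
        print(f"{deliverable}: {', '.join(questions)}")
```

A table like this is also a handy checklist when you trim the study: drop a question and you can see at once which deliverables lose their input.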

What the Deliverables Actually Look Like

Let me walk through what Claude Code produces from a single study, because the specificity matters.

The Battlecard

A one-page document with five sections. "Why We Win" lists the top three to four reasons personas chose your product, using their exact language, with the frequency count ("cited by 7 out of 10"). "Competitor Strengths" honestly acknowledges where the competitor excels – because sales reps who pretend competitors have no strengths lose credibility instantly – with a prepared response for each. "Landmine Questions" are high-impact questions designed to expose competitor gaps without sounding adversarial. "Quick Dismisses" are one to two sentence rebuttals for the competitor's most common marketing claims, grounded in the scepticism personas expressed. "Switching Triggers" identify the events that create openings: price increases, buggy releases, lost integrations.
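The "cited by 7 out of 10" counts are simple tallies once each persona's answer has been tagged with the themes it mentions. Here is a minimal sketch of that tally. It assumes the tagging has already been done (by Claude Code or by hand), and the theme labels in the example are invented for illustration rather than taken from a real study.

```python
from collections import Counter


def cited_by(themes_per_persona: list, top_n: int = 4) -> list:
    """Return the top themes as 'theme (cited by N out of M)' strings.

    themes_per_persona holds one set of theme labels per persona, so a
    persona who mentions the same theme twice is still counted once.
    """
    total = len(themes_per_persona)
    counts = Counter(theme for themes in themes_per_persona for theme in themes)
    # Highest count first; ties broken alphabetically for a stable order.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [f"{theme} (cited by {n} out of {total})" for theme, n in ranked[:top_n]]


if __name__ == "__main__":
    # Hypothetical tagged responses from four personas.
    sample = [
        {"faster setup", "clearer pricing"},
        {"faster setup"},
        {"clearer pricing", "better support"},
        {"faster setup"},
    ]
    for line in cited_by(sample, top_n=2):
        print(line)
```

Keeping the denominator in the output matters: "cited by 3 out of 4" and "cited by 3 out of 40" are very different pieces of evidence.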

The Objection Handling Guide

A structured table: objection category, the customer's actual concern (quoted from a persona), and the evidence-backed response. Not "here's what to say when they mention price" but "when they say 'it's too expensive,' four out of ten personas shared this concern – and here's what shifted their thinking." The difference between a scripted rebuttal and an evidence-backed response is the difference between dismissing a concern and genuinely addressing it.

The Pitch Narrative

Built on the StoryBrand framework: a customer (with a specific, study-validated problem) meets a guide (your company) who provides a plan (features in the order buyers said they'd use them), calls them to action, helps them avoid failure (objections addressed), and delivers success (outcomes personas identified). Every element of the narrative is grounded in what personas actually said, not what the PMM imagines a buyer's journey looks like.

The Demo Script

Features presented in buyer-priority order, not engineering-build order. When Question 4 reveals that eight out of ten personas want to see real-time reporting before anything else, the demo starts with real-time reporting – regardless of what the product team considers the most technically impressive feature. This single reordering routinely transforms demo effectiveness.
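The reordering itself is mechanical. As a rough sketch, assume each persona's Question 4 answer has already been mapped to one feature name (that mapping step is an assumption here, and the feature names are made up). The demo order is then the feature list sorted by how many personas picked each one.

```python
from collections import Counter


def demo_order(q4_picks: list, features: list) -> list:
    """Order features by how many personas named them in Question 4.

    Features nobody picked keep their original relative order at the end,
    because Python's sort is stable and a Counter returns 0 for missing keys.
    """
    votes = Counter(q4_picks)
    return sorted(features, key=lambda feature: -votes[feature])


if __name__ == "__main__":
    # Hypothetical engineering-build order and Question 4 picks.
    build_order = ["user management", "integrations", "real-time reporting"]
    picks = ["real-time reporting"] * 8 + ["integrations"] * 2
    print(demo_order(picks, build_order))
```

With eight of ten picks going to real-time reporting, it moves from last in the build order to first in the demo.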

The Buyer Committee Problem (and How to Solve It)

Here's where it gets properly interesting. Most B2B purchases involve multiple stakeholders, and they care about different things. The CFO wants ROI and risk mitigation. The technical evaluator wants security and integrations. The end user wants something that doesn't make their life harder. The department head wants strategic advantage.

Traditional enablement produces one battlecard, one objection guide, one demo script – and hopes it covers everyone. It never does.

The Ditto approach is to run three to four parallel studies with different persona configurations, each representing a different buyer type. The same seven questions, put to economic buyers, technical evaluators, end users, and executive sponsors, produce dramatically different responses. Claude Code then generates persona-specific versions of each deliverable.

The result: your sales rep walks into a meeting with the CFO armed with an ROI framework that addresses finance-specific concerns, a battlecard that leads with total cost of ownership, and a demo script that opens with the dashboard the CFO would actually use. The next meeting, with the engineering lead, uses entirely different collateral addressing security, API documentation, and integration architecture.

The marginal cost of running three additional studies is approximately 60 minutes of compute time. The traditional cost of producing persona-specific enablement for four buyer types is roughly "we'll get to it next quarter" – which in practice means never.

The Feedback Loop That Makes It Compound

The real advantage isn't the speed of the initial deliverable. It's the iteration cadence.

Traditional enablement operates on a quarterly refresh cycle at best. The PMM updates the battlecard when someone remembers to ask for it, usually triggered by losing a deal to a competitor they hadn't prepared for. By the time the update ships, the landscape has shifted again.

With Ditto and Claude Code, the feedback loop tightens from quarters to months:

Month 1: Run the baseline study. Generate all seven deliverables. Distribute to the sales team.

Month 2: Sales reports new objections from the field. Run a targeted objection study (five questions, eight personas). Update the objection guide and battlecard in an afternoon.

Month 3: Competitive landscape shifts – a competitor launches a new feature or changes pricing. Run a competitive perception study. Refresh the battlecard with current market sentiment.

Quarterly: Full refresh. Rerun the seven-question study with fresh personas. Compare results to the previous quarter. Identify perception trends. Update everything.

The compounding effect is significant. After four quarters, you have a longitudinal dataset on how market perception of your competitors is evolving. Your battlecards aren't snapshots – they're living documents backed by trend data.

Measuring Whether Any of This Actually Works

Sales enablement is notoriously difficult to measure, mostly because companies track the wrong things. Content downloads are a vanity metric. The battlecard getting 500 downloads means nothing if those downloads don't correlate with improved win rates.

The metrics that actually matter:

Win rate against specific competitors. Before and after deploying the battlecard. This is the only metric that directly validates whether your competitive enablement is working. If the win rate against Competitor A doesn't improve after deploying a battlecard specifically designed for that competitor, either the battlecard needs revision or the problem is elsewhere in the sales process.

Average sales cycle length. Better objection handling and demo scripts should shorten the cycle. If your deals close faster after deploying new enablement content, you've removed friction from the buying process.

Sales content utilisation. Are reps actually using the collateral? If utilisation is low, the content isn't credible or accessible enough. This is a diagnostic metric, not an outcome metric – but it's essential for debugging underperformance.

Qualitative feedback from the sales team. Numbers don't capture everything. A quarterly survey asking reps "What's the most useful enablement content you received this quarter?" and "What's missing?" provides signal that quantitative metrics miss. If the sales team says the battlecard helped them close three deals, that's worth knowing even if the sample is small.

Aim for roughly a 60/40 split in the metrics you track between leading indicators (content utilisation, rep feedback) and lagging indicators (win rates, cycle length). The leading indicators tell you whether the enablement is being adopted. The lagging indicators tell you whether adoption is producing results.
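The before-and-after win rate is the one metric here worth computing carefully, so here is a minimal sketch of it. It assumes you can export closed deals with the competitor involved, the close date, and the outcome. The `Deal` fields and the deployment date are hypothetical and would need mapping to whatever your CRM actually stores.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Deal:
    competitor: str   # competitor named on the deal (hypothetical field)
    closed_on: date   # date the deal closed, won or lost
    won: bool


def win_rate(deals: list) -> Optional[float]:
    """Share of deals won, or None when there are no deals to judge."""
    if not deals:
        return None
    return sum(deal.won for deal in deals) / len(deals)


def before_after(deals: list, competitor: str, deployed_on: date) -> tuple:
    """Win rate against one competitor before and after the battlecard shipped."""
    relevant = [d for d in deals if d.competitor == competitor]
    before = [d for d in relevant if d.closed_on < deployed_on]
    after = [d for d in relevant if d.closed_on >= deployed_on]
    return win_rate(before), win_rate(after)


if __name__ == "__main__":
    # Invented deals, for illustration only.
    deals = [
        Deal("Competitor A", date(2024, 1, 15), False),
        Deal("Competitor A", date(2024, 2, 20), True),
        Deal("Competitor A", date(2024, 4, 10), True),
        Deal("Competitor A", date(2024, 5, 5), True),
        Deal("Competitor B", date(2024, 4, 12), False),
    ]
    print(before_after(deals, "Competitor A", date(2024, 3, 1)))
```

With only a handful of deals on either side of the date, treat the comparison as a signal to investigate rather than proof. Other changes in the same period, such as pricing or team changes, will move the number too.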

The Enablement Problem Was Never About Effort

Product marketing teams work extraordinarily hard on sales enablement. The problem has never been insufficient effort. It's been insufficient input. Battlecards built on competitor websites and PMM intuition are, at best, educated guesses about what buyers think. They're often quite good guesses – PMMs are smart people – but they're guesses nonetheless.

The shift that Ditto and Claude Code enable is from assumption-based enablement to evidence-based enablement. Every claim in the battlecard is backed by a specific persona response. Every objection in the handling guide was raised by someone who matches your buyer profile. Every feature in the demo script was prioritised by the people who'd actually use the product.

The traditional timeline for producing a complete sales enablement kit is three to six weeks. The Ditto and Claude Code timeline is roughly the length of a long lunch. I realise that sounds like the sort of breathless claim I'd normally be sceptical of, but the maths is genuinely that simple: one study, seven questions, ten personas, seven deliverables, sixty minutes.

The sales team gets collateral grounded in buyer reality rather than PMM conjecture. The PMM gets their quarter back. And the buyers get sales conversations that actually address what they care about, in language they recognise as their own.

Which, if you think about it, is what sales enablement was always supposed to be.