There is a quietly devastating statistic that haunts every product marketing team I've spoken to: the average company conducts formal customer research once or twice a year. Occasionally three times, if they're feeling particularly ambitious. The rest of the time, they rely on a volatile cocktail of sales anecdotes, support ticket themes, the occasional NPS survey, and whatever the product manager overheard at last month's user conference.

This is how most organisations listen to their customers. Intermittently, expensively, and with a lag time that would be comical if it weren't so consequential. By the time the research agency delivers its findings, the market has moved. The insights are already historical artefacts - interesting, certainly, but about as useful for next week's positioning decision as a weather report from October.

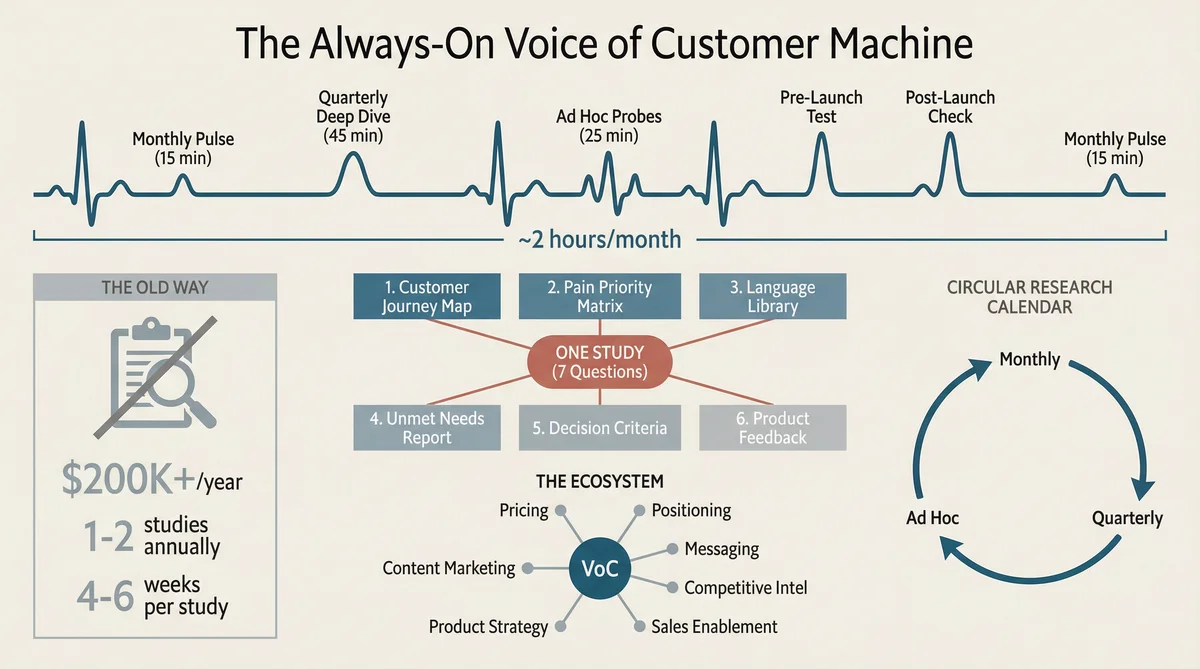

Voice of Customer - VoC, in the jargon - is supposed to be the backbone of product marketing. It feeds positioning, messaging, competitive intelligence, product roadmaps, and sales enablement. It is, in theory, the thing that stops you from building products nobody wants and writing copy nobody believes. And yet most companies treat it like a dental check-up: something you know you should do regularly but somehow only manage annually, with a vague sense of guilt in between.

What if VoC didn't have to work this way?

What Voice of Customer Actually Means (and Why Most Programmes Miss the Point)

Let's be precise about what we mean, because "Voice of Customer" has become one of those terms that means everything and therefore nothing. People use it to describe anything from a post-purchase email survey to a six-month ethnographic study. The breadth is part of the problem - when VoC means everything, it becomes nobody's specific responsibility.

At its core, VoC is the systematic process of capturing what your customers and target market think, feel, want, and struggle with - and translating those signals into actions. Not into PowerPoint slides. Into actual decisions about what to build, how to position it, what to say about it, and who to sell it to.

The traditional VoC toolkit includes:

Surveys (NPS, CSAT, CES) - quantify satisfaction, identify patterns at scale

In-depth interviews - explain why the patterns exist

Focus groups - small group discussions exploring perceptions and reactions

Social media listening - sentiment analysis across platforms

User testing - direct observation of product usage

Review mining - extracting insights from G2, TrustRadius, Capterra, and app stores

Support ticket analysis - mining customer service conversations

Customer advisory boards - structured dialogue with your most engaged users

Each of these methods is valuable. Each is also slow, expensive, or both. An in-depth interview programme with 15-20 participants - the number typically required to reach saturation - takes four to six weeks and costs $15,000 to $30,000 through a research agency. A single focus group runs $5,000 to $10,000. A comprehensive VoC programme covering quarterly studies, monthly pulse checks, and ad hoc probes easily reaches $200,000 a year.

At those costs and timelines, VoC becomes a luxury rather than a habit. And when research is a luxury, companies default to intuition. Which is how you end up with messaging that sounds plausible to the marketing team but bewildering to the people you're supposedly talking to.

The Three Reasons Traditional VoC Programmes Fail

I've watched enough VoC programmes to identify three recurring failure modes. They're all connected, and they all stem from the same root cause: the economics don't support the frequency you need.

Failure 1: The Research Is Too Infrequent to Be Useful

Markets move fast. Customer preferences shift. Competitors launch new features. Macroeconomic conditions change buying behaviour. A VoC study conducted in January may be substantially wrong by April. Yet most companies operate on annual or biannual research cycles because that's all the budget allows.

The result is that product marketing decisions are made on stale data dressed up as current insight. The positioning framework you're working from was validated nine months ago against a market that no longer exists in quite the same shape.

Failure 2: The Insights Never Reach the People Who Need Them

A research report that sits in a shared drive is not Voice of Customer. It's a document. VoC only matters when it changes behaviour - when the sales team adjusts their pitch, when the product team reprioritises the roadmap, when the content team shifts its messaging.

Traditional VoC programmes produce beautifully formatted 80-page reports. These reports get presented in a meeting, generate a week of enthusiasm, and then quietly decompose in a folder nobody opens again. The insights are real. The impact is minimal.

Failure 3: The Gap Between Signal and Action Is Too Wide

Even when insights are timely and well-distributed, most organisations lack a mechanism to translate VoC findings into specific, actionable changes. Knowing that "customers find our onboarding confusing" is different from rewriting the onboarding messaging, updating the demo script, and briefing the sales team on the new talk track.

The gap between "interesting finding" and "changed deliverable" is where most VoC programmes go to die. Not because anyone disagrees with the findings. Because nobody has the time to do anything about them.

Building an Always-On VoC Machine

Here's a proposition that I appreciate sounds unreasonably optimistic, so bear with me while I explain the mechanics.

Ditto is a synthetic research platform with over 300,000 AI-powered personas, each grounded in census data and behavioural research, spanning 15+ countries that together account for 65% of global GDP. You recruit a panel, ask structured questions, and get qualitative responses with the nuance of real interview participants - in minutes rather than weeks, at a fraction of the cost. The methodology shows 92% overlap with traditional focus groups and 95% correlation with traditional research, validated by Harvard, Cambridge, Stanford, and Oxford researchers.

Claude Code is an AI agent that orchestrates multi-step workflows. It designs studies, recruits personas, asks questions, interprets responses, and generates structured deliverables - autonomously.

When you combine them, VoC stops being an event and becomes a continuous programme. The economics shift so dramatically that the question changes from "can we afford to run research this quarter?" to "why wouldn't we run research this week?"

A single VoC study - 10 personas, 7 questions - takes approximately 20 minutes to run. Insight extraction and deliverable generation take another 15 to 25 minutes. Total elapsed time: roughly 35 to 45 minutes. Traditional equivalent: four to six weeks and $15,000 to $30,000.

At those economics, you can afford to make VoC a habit.

The Seven Questions That Unlock Everything

The study design is where craft meets science. These seven questions are specifically engineered to produce the raw material for every VoC output a product marketing team needs. Each question does multiple duty, feeding different deliverables simultaneously.

Q1: "Tell me about the last time you [relevant activity]. Walk me through the experience from start to finish. What went well? What was frustrating?"

This is experience mapping in a single question. The persona reconstructs a real scenario, revealing touchpoints, pain moments, and delight moments. The specificity of "walk me through" prevents vague generalisations and forces concrete, actionable detail. This feeds customer journey maps and product feedback synthesis.

Q2: "If you could wave a magic wand and fix ONE thing about [problem space], what would it be? Why that above everything else?"

The constraint of "one thing" forces prioritisation. When ten personas each name their single most important fix, you get a ranked pain priority matrix that reflects genuine severity, not the laundry list of complaints you'd get from an open-ended "what frustrates you?" question.

Q3: "How do you currently solve [problem]? What tools, people, or workarounds do you use? What do you wish you could do differently?"

This reveals the competitive landscape as it actually exists - not just direct competitors but spreadsheets, manual processes, hiring decisions, and doing nothing. April Dunford's positioning framework begins with competitive alternatives, and this question maps them from the customer's perspective rather than the product team's assumptions.

Q4: "Think about the best [product/service] experience you've ever had in any category. What made it great? Now think about the worst. What made it terrible?"

This is expectation benchmarking. Customers don't evaluate your product in isolation - they compare it against their best and worst experiences across all categories. The insurance company that delighted them sets the bar for your onboarding. The airline that infuriated them defines what "poor communication" means. Understanding these reference points tells you what standard you're actually being measured against.

Q5: "When you're researching a new [product type], what do you look for first? Second? What's a dealbreaker?"

This maps the purchase decision framework from the buyer's perspective. It reveals which evaluation criteria matter most, in what order, and which are non-negotiable. This feeds directly into sales enablement, demo scripts, and one-pagers - because you now know what to lead with and what to never omit.

Q6: "If a product promised to [core value prop], how would you want to experience that? In the product itself? Through reports? Through a person helping you?"

Delivery preference is massively underresearched. Most companies assume their customers want a self-serve dashboard because that's what the product team built. But some segments strongly prefer a human touchpoint, or a weekly email digest, or an in-app notification. Getting this wrong means customers never discover the value you've built for them.

Q7: "Is there anything about [problem space] that you feel companies just don't understand? What do you wish they'd get right?"

This is the white space question. It surfaces unmet needs, frustrations with the category as a whole, and opportunities that competitors haven't addressed. These insights are gold for positioning ("unlike everyone else, we actually understand that...") and for product strategy ("they're all asking for X, and nobody offers it").
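To make the bracketed slots in the seven questions concrete, here is a minimal Python sketch that treats them as reusable templates. The slot names (`activity`, `problem_space` and so on) and the invoicing example are my own assumptions for illustration. The sketch only prepares question text and does not call Ditto or Claude Code.

```python
from string import Formatter

# The seven questions from the text, with each bracketed slot rewritten as a
# named placeholder. The slot names are illustrative assumptions.
QUESTION_TEMPLATES = [
    "Tell me about the last time you {activity}. Walk me through the "
    "experience from start to finish. What went well? What was frustrating?",
    "If you could wave a magic wand and fix ONE thing about {problem_space}, "
    "what would it be? Why that above everything else?",
    "How do you currently solve {problem}? What tools, people, or workarounds "
    "do you use? What do you wish you could do differently?",
    "Think about the best {product_or_service} experience you've ever had in "
    "any category. What made it great? Now think about the worst. What made "
    "it terrible?",
    "When you're researching a new {product_type}, what do you look for "
    "first? Second? What's a dealbreaker?",
    "If a product promised to {value_prop}, how would you want to experience "
    "that? In the product itself? Through reports? Through a person helping "
    "you?",
    "Is there anything about {problem_space} that you feel companies just "
    "don't understand? What do you wish they'd get right?",
]


def required_slots(templates):
    """Collect every placeholder name used across the templates."""
    return {
        field
        for template in templates
        for _, field, _, _ in Formatter().parse(template)
        if field
    }


def render_study(templates, slots):
    """Fill the templates, refusing to run with a slot left blank."""
    missing = required_slots(templates) - set(slots)
    if missing:
        raise ValueError(f"missing slots: {sorted(missing)}")
    return [template.format(**slots) for template in templates]


# Hypothetical slot values for an invoicing product.
study = render_study(QUESTION_TEMPLATES, {
    "activity": "chased a late invoice",
    "problem_space": "getting paid on time",
    "problem": "late payments",
    "product_or_service": "software",
    "product_type": "invoicing tool",
    "value_prop": "cut late payments in half",
})
```

Keeping the wording fixed and varying only the slots is what lets each quarter's study be compared with the last one.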

What You Actually Get From a VoC Study

Claude Code transforms the raw persona responses into six distinct deliverables, each designed to be immediately actionable by a different team:

Customer journey map - key touchpoints, pain moments, and delight moments reconstructed from the experience mapping question. Not a theoretical journey designed in a workshop - an evidence-backed map of what actually happens.

Pain priority matrix - customer frustrations ranked by severity and frequency, with supporting quotes from each persona. The product team gets a prioritised list of problems to solve. The marketing team gets language for messaging. The sales team gets proof that you understand the prospect's world.

Language library - the exact words and phrases customers use when talking about their problems, needs, and evaluation criteria. This is arguably the most commercially valuable output: messaging written in customer language consistently outperforms messaging written in company language. Every word in this library came from a persona, not a copywriter.

Unmet needs report - gaps that no one is addressing, opportunities hiding in plain sight. When seven out of ten personas independently identify the same unaddressed frustration, you've found a positioning opportunity that your competitors are sleeping on.

Decision criteria hierarchy - what matters most to least when buyers evaluate solutions in your category. This feeds sales enablement directly: lead with what buyers rank first, not what your product team thinks is most impressive.

Product feedback synthesis - actionable recommendations structured for the product team. Not "customers want it to be better" but "eight out of ten personas independently identified [specific thing] as the primary barrier to adoption, suggesting it should be addressed before [other feature]."

The critical distinction here is that every output is traceable to specific persona responses. There's no black box. The product manager can read the original answers. The sales rep can quote a specific persona. The CEO can see the raw data behind the summary. This traceability is what makes people trust the findings enough to act on them.
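As a rough illustration of what traceability can look like in data terms, the sketch below builds a pain priority matrix from coded answers to the "one thing" question. The persona IDs, themes and quotes are invented placeholders, not study output, and the ranking uses mention count only. A fuller version would also need a severity score, which I have left out because the text does not say how severity is measured.

```python
from collections import defaultdict

# Hypothetical coded answers: (persona_id, pain theme, supporting quote).
# All values are invented for illustration.
responses = [
    ("p01", "onboarding", "I gave up halfway through setup."),
    ("p02", "pricing clarity", "I could not tell what I would pay."),
    ("p03", "onboarding", "Nobody told me what to do first."),
    ("p04", "integrations", "It does not talk to our CRM."),
    ("p05", "onboarding", "The first week was guesswork."),
    ("p06", "pricing clarity", "The tiers made no sense to me."),
]


def pain_priority_matrix(responses):
    """Rank themes by mention count, keeping the evidence behind each row."""
    by_theme = defaultdict(list)
    for persona_id, theme, quote in responses:
        by_theme[theme].append((persona_id, quote))

    # Most-mentioned first; ties are broken alphabetically for stable output.
    ranked = sorted(by_theme.items(), key=lambda item: (-len(item[1]), item[0]))
    return [
        {
            "theme": theme,
            "mentions": len(evidence),
            "share": len(evidence) / len(responses),
            "evidence": evidence,  # every row stays traceable to personas
        }
        for theme, evidence in ranked
    ]


matrix = pain_priority_matrix(responses)
for row in matrix:
    print(f"{row['theme']}: {row['mentions']} mentions ({row['share']:.0%})")
```

Because each row carries its `evidence` list, anyone reading the summary can go straight back to the persona and quote that produced it.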

Building the Research Calendar

An always-on VoC programme doesn't mean running research every day. It means having a cadence that matches the rate at which your market changes, so your understanding never falls critically out of date.

Here's what the calendar looks like in practice:

Monthly pulse checks (3 questions, 6 personas, ~15 minutes) - Track shifting priorities and emerging themes. Are the same pains dominant, or is something new surfacing? These are your early warning system.

Quarterly deep dives (7 questions, 10 personas, ~45 minutes) - Comprehensive understanding refreshed every 90 days. Each quarter's study uses the same question framework, enabling trend analysis across time periods.

Ad hoc targeted probes (5 questions, 8 personas, ~25 minutes) - Investigate specific signals. A competitor just launched a new feature? Run a probe. Sales is hearing a new objection? Test it. A pricing change is under discussion? Validate willingness to pay.

Pre-launch concept tests (7 questions, 10 personas, ~45 minutes) - Before committing engineering resources or marketing budget, validate that the market wants what you're about to build or say.

Post-launch sentiment checks (5 questions, 10 personas, ~30 minutes) - Two weeks after launch, measure market reaction. Did the messaging land? Is the feature understood? Where are the gaps?

Total time investment: approximately two hours per month. A traditional VoC programme at this frequency would cost north of $200,000 annually in agency fees and recruitment alone.
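The two-hour figure depends on how often each study type runs, which the calendar only fixes for the monthly and quarterly formats. Here is a small sketch of the arithmetic. The per-study minutes come from the list above, while the runs per year for ad hoc probes and launch studies are assumptions you should replace with your own.

```python
# (minutes per study, studies per year)
# Durations are from the calendar above. The yearly counts for the last
# three formats are assumptions, not figures from the text.
CALENDAR = {
    "monthly pulse check": (15, 12),
    "quarterly deep dive": (45, 4),
    "ad hoc targeted probe": (25, 24),       # assumed: two per month
    "pre-launch concept test": (45, 4),      # assumed: one launch per quarter
    "post-launch sentiment check": (30, 4),  # assumed: one launch per quarter
}


def minutes_per_year(calendar):
    """Total study minutes across all formats for one year."""
    return sum(minutes * runs for minutes, runs in calendar.values())


total = minutes_per_year(CALENDAR)
print(f"{total} minutes per year")        # 1260 minutes per year
print(f"{total // 12} minutes per month") # 105 minutes per month
```

Under these assumptions the programme comes to 105 minutes a month, which is in line with the roughly two hours quoted. The ad hoc probes dominate the total, so that is the number to watch if your market is noisy.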

The compounding effect is the real prize. After four quarters of consistent deep dives, you have a longitudinal dataset that shows how customer priorities, pain points, and competitive perceptions are evolving. You're not making positioning decisions based on a snapshot - you're working from a trend line. The difference in confidence is striking.
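A trend line can start out very simply. The sketch below labels each pain theme as rising, falling or stable by comparing the first and last quarter. The counts are invented placeholders (personas out of ten naming the theme as their top fix), and comparing first with last is a deliberately crude stand-in for a proper trend analysis.

```python
# Hypothetical counts per quarter (Q1..Q4) of personas, out of 10, naming
# each theme as their single most important fix. Invented for illustration.
quarterly_counts = {
    "onboarding": [5, 4, 3, 2],
    "pricing clarity": [2, 3, 4, 5],
    "integrations": [3, 3, 3, 3],
}


def trend(counts, tolerance=1):
    """Label a series by the change between its first and last value.

    Changes of `tolerance` personas or fewer are treated as noise.
    """
    delta = counts[-1] - counts[0]
    if delta > tolerance:
        return "rising"
    if delta < -tolerance:
        return "falling"
    return "stable"


trends = {theme: trend(counts) for theme, counts in quarterly_counts.items()}
print(trends)
```

The tolerance matters with panels this small: a one-persona swing on a ten-persona study is not a signal worth repositioning around.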

Cross-Segment and Cross-Market VoC

Here's where the economics become properly interesting.

Traditional VoC forces you to choose: research SMB buyers or enterprise buyers. North American customers or European customers. Technical evaluators or economic buyers. The budget simply doesn't stretch to cover all segments with the depth each deserves.

With Ditto spanning 15+ countries and supporting granular demographic filtering - country, state, age, gender, education, employment, parental status - you can run the same study across multiple segments simultaneously. Claude Code orchestrates parallel studies and produces comparative analysis automatically.

Imagine running the same seven VoC questions against four groups:

US adults aged 25-35 (digital-native buyers)

US adults aged 40-55 (established professional buyers)

UK adults aged 25-50 (European market comparison)

Canadian adults aged 25-50 (North American variation)

The same questions. Four groups. Four sets of responses. Claude Code then produces a cross-market comparison matrix showing where perceptions align and where they diverge. Perhaps US buyers rank price sensitivity highest whilst UK buyers prioritise data privacy. Perhaps younger buyers discover products through social media whilst older buyers rely on peer recommendations.

This kind of comparative insight typically requires commissioning four separate research agencies across three countries. With Ditto and Claude Code, it takes approximately one hour. The implications for international expansion strategy, localised messaging, and segment-specific positioning are significant.
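For a sense of what a cross-market comparison matrix contains, here is a minimal sketch using the four groups above. The rankings are invented to mirror the "perhaps" examples in the text and are not findings. The function names are hypothetical and nothing here reflects how Claude Code actually builds its comparison.

```python
# Hypothetical decision-criteria rankings per segment, most important first.
# Invented for illustration only.
rankings = {
    "US 25-35": ["price", "ease of use", "data privacy", "support"],
    "US 40-55": ["price", "data privacy", "ease of use", "support"],
    "UK 25-50": ["data privacy", "price", "ease of use", "support"],
    "CA 25-50": ["price", "ease of use", "data privacy", "support"],
}


def comparison_matrix(rankings):
    """Map each criterion to its rank in every segment (None if unranked)."""
    criteria = sorted({c for ranked in rankings.values() for c in ranked})
    return {
        criterion: {
            segment: ranked.index(criterion) + 1 if criterion in ranked else None
            for segment, ranked in rankings.items()
        }
        for criterion in criteria
    }


def divergent(matrix):
    """Criteria whose rank differs between at least two segments."""
    return [c for c, ranks in matrix.items() if len(set(ranks.values())) > 1]


matrix = comparison_matrix(rankings)
print(divergent(matrix))  # ['data privacy', 'ease of use', 'price']
```

The criteria that do not appear in the divergent list are the ones you can message identically everywhere. The ones that do appear are where localised positioning earns its keep.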

How VoC Feeds Every Product Marketing Function

The reason VoC is the backbone of product marketing isn't philosophical - it's architectural. Every other PMM function depends on customer understanding as its primary input. When VoC is rich, current, and accessible, everything downstream improves. When VoC is thin, stale, or absent, every other function is building on sand.

Here's the dependency map:

Positioning - requires understanding competitive alternatives (VoC Q3), what customers value most (VoC Q2), and what language they use (VoC language library). April Dunford's five-component framework simply cannot be executed without VoC data.

Messaging - the language library from VoC studies provides the raw material for messaging that resonates. Messaging written in customer language consistently outperforms messaging written in company language, and every message test is more effective when grounded in VoC.

Competitive intelligence - VoC reveals how buyers actually perceive competitors, which is often dramatically different from how competitors position themselves. The competitor's website says "industry-leading AI." Your VoC study reveals that buyers think their AI is "clunky but adequate." That's battlecard intelligence.

Sales enablement - objection handling guides need real objections. Demo scripts need buyer-prioritised feature order. One-pagers need customer language. All of these come from VoC.

Product strategy - the unmet needs report and decision criteria hierarchy feed directly into roadmap discussions. When eight out of ten personas identify the same unaddressed pain, that's a product decision backed by market evidence.

Content marketing - every VoC study produces publishable insights. The quotes, statistics, and patterns become blog posts, social content, and thought leadership pieces. Research-backed content with original data dramatically outperforms generic advice articles for both SEO and engagement.

Pricing - VoC reveals willingness to pay, price sensitivity, and the perceived value-price relationship. These signals inform pricing strategy with market evidence rather than internal assumptions.

When VoC runs continuously, all of these downstream functions receive a steady stream of fresh input. Positioning gets validated quarterly. Messaging stays grounded in current language. Battlecards reflect this month's competitive perception, not last year's.

Making VoC Actionable (Because Research Without Action Is Just Expensive Reading)

The most common failure mode of VoC programmes - traditional or otherwise - is the gap between insight and action. A finding that "customers are confused by our pricing page" is useless unless someone rewrites the pricing page.

The Claude Code advantage here is underappreciated. Because the agent doesn't just collect insights - it produces deliverables from them - the action gap shrinks dramatically. The VoC study doesn't produce a report that someone needs to interpret and translate. It produces:

Updated messaging recommendations ready for the copywriter

Revised objection handling content ready for the sales team

Product feedback structured for the roadmap discussion

A publishable blog post with original research data

Competitive perception updates for the battlecard

The research and the output are produced in the same workflow. There's no handoff. No interpretation layer. No six-week gap between "we learned something" and "we did something about it."

This is a genuinely different operating model. Traditional VoC asks: "What did we learn?" This approach answers: "Here is what we learned, and here are the updated assets that reflect it."

Measuring Whether Your VoC Programme Actually Works

A VoC programme that can't demonstrate impact will eventually lose its budget. Here's what to track:

Decision frequency. How often are VoC findings cited in product, marketing, and sales decisions? If the answer is "rarely," the insights aren't reaching the right people or aren't compelling enough to change behaviour. Track how many times per quarter a stakeholder references VoC data in a meeting, document, or Slack message.

Time from insight to action. Measure the gap between a VoC finding and the resulting change. If the September study identifies a messaging problem and the messaging isn't updated until December, the programme isn't fast enough. With Claude Code generating deliverables from study data directly, this gap should be measured in days, not months.

Downstream metric improvement. Track the metrics that VoC should influence: win rates (are they improving since you started grounding battlecards in buyer perception?), sales cycle length (is it shortening since the demo script was reordered?), content engagement (is research-backed content outperforming opinion pieces?), feature adoption (are products built on VoC insights adopted faster?). Aim for roughly a 60/40 mix of leading indicators (early signals such as content engagement) and lagging indicators (outcomes such as win rates), so that you see movement early without losing sight of results.

Research coverage. Are you researching all the segments that matter, or just the one you default to? If your product serves SMB and enterprise customers but you've only run VoC on enterprise buyers, you're flying blind on half your market. Track which segments have been researched in the last 90 days.

Trend detection rate. The ultimate test of an always-on VoC programme: did you spot a market shift before your competitors did? If your monthly pulse check in February reveals that buyer priorities are shifting and you update your positioning before anyone else notices, that's a concrete competitive advantage attributable directly to your VoC cadence.
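Two of these measures, time from insight to action and research coverage, are easy to compute once you keep a simple log. The sketch below assumes a hypothetical log format. The findings, dates and segment names are invented, and the 90-day window is the one suggested above.

```python
from datetime import date, timedelta

# Hypothetical log of findings: when each surfaced and when an asset changed.
findings = [
    {"finding": "pricing page confusing",
     "found": date(2025, 9, 2), "acted": date(2025, 9, 5)},
    {"finding": "demo leads with wrong feature",
     "found": date(2025, 9, 2), "acted": date(2025, 9, 16)},
    {"finding": "new competitor objection",
     "found": date(2025, 10, 1), "acted": None},  # still open
]

# Hypothetical date of the most recent study per segment.
last_study = {"enterprise": date(2025, 9, 2), "SMB": date(2025, 4, 10)}


def days_to_action(findings):
    """Days between finding and resulting change, for closed findings only."""
    return [(f["acted"] - f["found"]).days for f in findings if f["acted"]]


def stale_segments(last_study, today, window_days=90):
    """Segments with no study inside the coverage window."""
    cutoff = today - timedelta(days=window_days)
    return sorted(seg for seg, studied in last_study.items() if studied < cutoff)


print(days_to_action(findings))                        # [3, 14]
print(stale_segments(last_study, date(2025, 10, 15)))  # ['SMB']
```

Open findings are excluded from the gap calculation on purpose. It is worth reporting them separately, since a long list of findings with no action date is its own warning sign.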

The Research Habit

The insight that changed how I think about VoC is deceptively simple: the value of customer research is proportional to its frequency, not its depth. A lightweight study run monthly is more valuable than an exhaustive study run annually, because markets change faster than annual research can track.

Traditional VoC economics forced companies into the wrong tradeoff. You could have depth or frequency but not both, so sensible people chose depth. Run one big study. Make it count. Squeeze every insight out of it. Then coast for six months until the budget refreshes.

The combination of Ditto's synthetic research and Claude Code's autonomous orchestration eliminates the tradeoff. You can have depth and frequency. A 10-persona, 7-question deep dive takes 45 minutes and costs a fraction of a traditional study. Run it monthly. Run it for different segments. Run it across markets. Run it before every launch and after every competitive move.

When research takes 45 minutes instead of six weeks, you stop treating it as an event and start treating it as a habit. And when VoC becomes a habit, everything built on top of it - positioning, messaging, sales enablement, product strategy - becomes more grounded, more current, and more effective.

The $200,000 annual research budget gets replaced by two hours a month. The quarterly refresh cycle gets replaced by a continuous feed. The 80-page report gets replaced by actionable deliverables produced in the same workflow as the research itself.

Your customers are talking. The question is whether you're listening often enough to hear what they're actually saying.