Product research has a credibility problem. Not the research itself, but the absence of it. Ask any product manager what validated their last major feature decision and you'll get some combination of competitive benchmarking, stakeholder opinions, and whatever the loudest person in the room said during the offsite. The methodology, such as it is, could be charitably described as 'vibes-based prioritisation.'

This isn't a character flaw. It's a time constraint. Comprehensive customer research historically meant £15,000-50,000 and 6-8 weeks of lead time. When you're shipping weekly, that timeline makes research a strategic luxury rather than an operational tool. So teams ship based on intuition, then retrofit a 'customer insight' narrative.

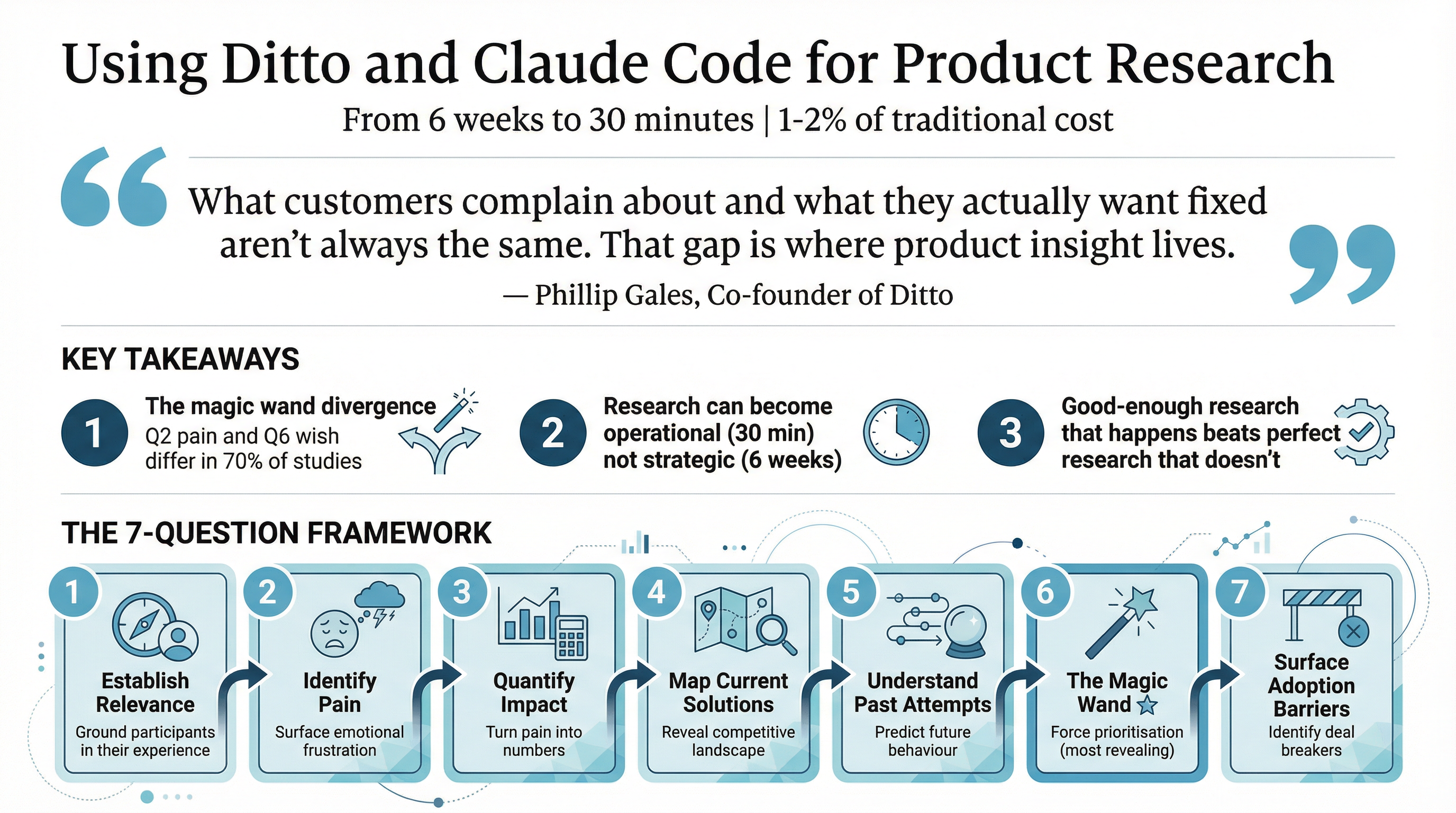

But something has shifted. The same AI acceleration that has transformed software development is now coming for the research bottleneck. Claude Code, Anthropic's terminal-based coding agent, can now orchestrate complete customer research studies through the Ditto synthetic persona platform. Ten demographically filtered synthetic consumers, seven validated questions, actionable insights, a shareable report. Up to 30 minutes. From your terminal.

This isn't incremental. It's a category-level change in how product decisions get made.

What Actually Happens When You Run Research From the Terminal

Let me walk through what this looks like in practice. You're sitting in Claude Code, working on a pricing page redesign. You've got three price points under consideration and no conviction about which will convert best. Historically, you'd either A) guess, B) run an A/B test that takes 3 weeks to reach significance, or C) commission a research study that arrives after the decision deadline.

Now you type a natural language prompt:

Run a pricing study for our SaaS product. Test price sensitivity between $19/month, $29/month, and $49/month with 10 US professionals aged 25-45 who work in marketing. I want to understand their gut reaction to each price point and what would make them hesitate.

Claude Code reads the Ditto skill I've built, which contains the complete research workflow, then works through six sequential steps against the Ditto API:

1. Creates a research group with your demographic filters (country, age, profession)

2. Creates a study with your research objective

3. Designs questions using a validated 7-question framework

4. Polls for responses as 10 synthetic personas answer each question

5. Triggers AI analysis to extract themes, segments, and divergences

6. Generates a shareable link anyone can view without an API key

The whole process takes 15-30 minutes. You get back a summary of findings, direct quotes from 10 personas with different perspectives, and a URL you can drop into Slack for your team to review. The research is done before your coffee gets cold.
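The six steps are plain sequential orchestration with one polling loop in the middle. The sketch below shows that control flow only. It is not the skill's code or Ditto's real API: `StudyClient`, its method names, the `DemographicFilter` fields and the in-memory `FakeClient` are all hypothetical stand-ins so the flow runs offline.

```python
import time
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class DemographicFilter:
    """Hypothetical filter shape mirroring the prompt: country, age range, profession."""
    country: str
    min_age: int
    max_age: int
    profession: str


class StudyClient(Protocol):
    """Hypothetical client interface; Ditto's real endpoints and names may differ."""
    def create_group(self, filters: DemographicFilter, size: int) -> str: ...
    def create_study(self, group_id: str, objective: str) -> str: ...
    def add_questions(self, study_id: str, questions: list[str]) -> None: ...
    def responses_complete(self, study_id: str) -> bool: ...
    def analyze(self, study_id: str) -> str: ...
    def share_link(self, study_id: str) -> str: ...


def run_study(client: StudyClient, filters: DemographicFilter, objective: str,
              questions: list[str], size: int = 10,
              poll_seconds: float = 0.0, max_polls: int = 100) -> tuple[str, str]:
    """Run the six steps in order and return (analysis summary, share URL)."""
    group_id = client.create_group(filters, size)        # 1. research group
    study_id = client.create_study(group_id, objective)  # 2. study with objective
    client.add_questions(study_id, questions)            # 3. question set
    for _ in range(max_polls):                           # 4. poll until personas finish
        if client.responses_complete(study_id):
            break
        time.sleep(poll_seconds)
    else:
        raise TimeoutError("personas did not finish answering in time")
    summary = client.analyze(study_id)                   # 5. AI analysis
    return summary, client.share_link(study_id)          # 6. shareable link


@dataclass
class FakeClient:
    """In-memory stand-in; responses 'arrive' after a fixed number of polls."""
    polls_needed: int = 3
    calls: list[str] = field(default_factory=list)

    def create_group(self, filters: DemographicFilter, size: int) -> str:
        self.calls.append("create_group")
        return "group-1"

    def create_study(self, group_id: str, objective: str) -> str:
        self.calls.append("create_study")
        return "study-1"

    def add_questions(self, study_id: str, questions: list[str]) -> None:
        self.calls.append("add_questions")

    def responses_complete(self, study_id: str) -> bool:
        self.calls.append("poll")
        self.polls_needed -= 1
        return self.polls_needed <= 0

    def analyze(self, study_id: str) -> str:
        self.calls.append("analyze")
        return "summary of themes"

    def share_link(self, study_id: str) -> str:
        self.calls.append("share_link")
        return f"https://example.com/share/{study_id}"


client = FakeClient()
summary, url = run_study(
    client,
    DemographicFilter("US", 25, 45, "marketing"),
    "Price sensitivity at $19, $29 and $49 per month",
    ["Walk me through how you currently handle campaign budgeting."],
)
print(summary, url)
```

A bounded polling loop with a pause between checks keeps step 4 from hanging forever if personas never finish. The `max_polls` cap and zero default sleep are arbitrary choices for the demo.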

Why Synthetic Personas Are More Useful Than You'd Expect

I'll acknowledge the immediate objection: 'These aren't real people.' Correct. They're AI-powered personas calibrated against census data and behavioural research from Harvard, Cambridge, Stanford, and Oxford. Ditto maintains over 300,000 of them across four countries.

The relevant question isn't whether they're 'real' but whether they're useful. EY ran a validation study comparing Ditto's synthetic responses to traditional focus groups across multiple research scenarios. The statistical overlap was 92%.

More importantly, synthetic personas solve the problems that make traditional research impractical:

Availability: They respond at 3am on a Sunday. No recruitment cycles, no scheduling nightmares, no participants who don't show up.

Consistency: You can put follow-up questions to the same panel without participant fatigue or memory effects.

Speed: A 7-question study with 10 personas completes in under 30 minutes. The traditional equivalent takes 4-8 weeks.

Cost: Roughly 1-2% of traditional research costs. This means you can run 50 or more small studies for the price of one big one.

Iteration: When findings from Phase 1 surprise you, Phase 2 can start immediately with the same or a fresh panel.

The paradigm shift isn't 'synthetic vs real.' It's 'rapid, frequent research vs occasional, expensive research.' Good-enough research that happens beats perfect research that doesn't.
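The cost claim reduces to simple arithmetic: at 2% of a traditional study's cost, one traditional budget covers 50 synthetic studies, and at 1% it covers 100. The sketch applies those ratios to the £15,000-50,000 range from the opening. The per-study figures it prints are derived from the article's ratios, not taken from Ditto's price list.

```python
# Figures quoted in the article; per-study costs below are derived, not a price list.
TRADITIONAL_STUDY_GBP = (15_000, 50_000)  # cost range for one traditional study
SYNTHETIC_COST_PERCENT = (1, 2)           # synthetic research at "roughly 1-2%" of that


def synthetic_cost_range(traditional: tuple[int, int],
                         percent: tuple[int, int]) -> tuple[float, float]:
    """Lowest and highest implied cost of one synthetic study, in GBP."""
    return traditional[0] * percent[0] / 100, traditional[1] * percent[1] / 100


def studies_per_traditional_budget(percent: int) -> int:
    """How many synthetic studies one traditional study's budget buys at a given cost ratio."""
    return 100 // percent


low, high = synthetic_cost_range(TRADITIONAL_STUDY_GBP, SYNTHETIC_COST_PERCENT)
print(f"Implied cost per synthetic study: £{low:,.0f} to £{high:,.0f}")
for pct in SYNTHETIC_COST_PERCENT:
    print(f"At {pct}%: {studies_per_traditional_budget(pct)} studies per traditional budget")
```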

The 7-Question Framework That Produces Useful Findings

The difference between useful Ditto research and wasted API calls is almost entirely in the questions. After running 50+ production studies across startup validation, CPG consumer research, political voter sentiment, and B2B product feedback, I've seen a clear pattern emerge. Seven questions, asked in sequence, consistently surface actionable insights:

1. Establish relevance: 'Walk me through how you currently handle [task]. What does a typical week look like?' This filters out irrelevant participants and establishes baseline behaviour.

2. Identify pain: 'What's the most frustrating part of [task]? What makes you want to throw your laptop out the window?' The vivid metaphor gives personas permission to express genuine frustration.

3. Quantify impact: 'Roughly how much time or money do you lose to [problem] per week?' This turns qualitative pain into numbers for ROI calculations.

4. Map current solutions: 'What tools or workarounds do you currently use? What works? What doesn't?' This reveals the real competitive landscape, including Excel spreadsheets and manual processes.

5. Understand past attempts: 'Have you tried switching to something new? What happened?' Past behaviour predicts future behaviour. Switching stories reveal triggers and barriers.

6. The magic wand: 'If you could fix ONE thing about [task], what would it be?' Consistently the most revealing question. The constraint forces prioritisation.

7. Surface adoption barriers: 'What would make you hesitant to try a new solution, even if it saved you time?' This identifies deal breakers before you build.

The magic wand question deserves special attention. In 7 of 10 startup due diligence studies I've run, the magic wand answer diverged from the pain identified in question 2. What customers complain about and what they actually want fixed aren't always the same. That gap is where product insight lives.
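Because the framework is a fixed sequence with two placeholders, it can be stored once as a template and filled per study. A minimal sketch: the question wording is copied from the framework above, while the short keys and the `$task`/`$problem` placeholder names are hypothetical labels, not part of the published skill.

```python
from string import Template

# The seven framework questions in order; $task and $problem are the placeholders.
FRAMEWORK = [
    ("relevance", "Walk me through how you currently handle $task. What does a typical week look like?"),
    ("pain", "What's the most frustrating part of $task? What makes you want to throw your laptop out the window?"),
    ("impact", "Roughly how much time or money do you lose to $problem per week?"),
    ("solutions", "What tools or workarounds do you currently use? What works? What doesn't?"),
    ("past_attempts", "Have you tried switching to something new? What happened?"),
    ("magic_wand", "If you could fix ONE thing about $task, what would it be?"),
    ("barriers", "What would make you hesitant to try a new solution, even if it saved you time?"),
]


def build_questions(task: str, problem: str) -> list[str]:
    """Fill the placeholders; Template.substitute raises KeyError if one is left unfilled."""
    values = {"task": task, "problem": problem}
    return [Template(text).substitute(values) for _, text in FRAMEWORK]


for question in build_questions("expense reporting", "chasing missing receipts"):
    print(question)
```

Keeping the order fixed matters for the magic wand comparison: the pain question always sits at index 1 and the magic wand at index 5, so the divergence between them can be checked at the same positions in every study.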

Real Results: What This Looks Like in Production

Let me share some concrete examples from actual research conducted through this workflow.

CareQuarter: A Startup Validated in Four Hours

CareQuarter is an elder care coordination service for the 'sandwich generation,' working adults aged 45-65 managing healthcare for aging parents. The entire concept was validated through three Ditto research phases, 32 personas, and 21 questions. Total elapsed time: four hours.

Phase 1 (Pain Discovery) revealed something unexpected. The hypothesis was that customers struggled with time burden. The actual finding: they struggled with responsibility without authority. They were the 'human API' stitching together a healthcare system that refuses to talk to itself, but had no legal standing to actually act.

Phase 2 (Deep Dive) established the trust architecture. Customers wanted HIPAA-only access first (power of attorney was too much trust too fast), a named coordinator (not rotating teams), and phone/paper communication (not another app; they already have too many portals).

Phase 3 (Concept Test) validated pricing: $175/month for routine coordination, $325/month for complex needs. Every single persona confirmed these prices were within an acceptable range. Not 'probably reasonable.' Validated.

The winning positioning: 'Stop being the unpaid case manager.' It hit hardest because it named the pain without patronising. The entire product concept, landing page, and pitch deck were built on research conducted that afternoon.

ESPN DTC: The Pricing Cliff

A hedge fund needed to understand consumer price sensitivity for a hypothetical standalone ESPN streaming service. We ran 64 personas across four price points. The results were stark:

At $9.99/month: 65.7% likely to subscribe

At $14.99/month: 42.1% likely

At $19.99/month: 18.4% likely

At $29.99/month: 6.3% likely

There's a cliff between $14.99 and $19.99: stated likelihood more than halves, from 42.1% to 18.4%. That finding directly informed a trading position on Disney stock. Research that would have taken a traditional firm 6 weeks was completed in an afternoon.
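One way to make the cliff concrete is to weight each price by its stated likelihood, giving expected monthly revenue per 100 respondents. This is an illustrative calculation on the four figures above, not the method used in the study. It treats stated likelihood as a proxy for conversion, which is a simplifying assumption.

```python
# Stated subscription likelihood (%) at each monthly price, from the ESPN study.
RESULTS = [(9.99, 65.7), (14.99, 42.1), (19.99, 18.4), (29.99, 6.3)]


def revenue_per_100(price: float, likely_pct: float) -> float:
    """Expected monthly revenue from 100 respondents, treating stated likelihood as conversion."""
    return price * likely_pct


def steepest_drop(results: list[tuple[float, float]]) -> tuple[float, float]:
    """Adjacent price pair where expected revenue falls most per dollar of price increase."""
    worst = None
    for (p1, l1), (p2, l2) in zip(results, results[1:]):
        drop_per_dollar = (revenue_per_100(p1, l1) - revenue_per_100(p2, l2)) / (p2 - p1)
        if worst is None or drop_per_dollar > worst[0]:
            worst = (drop_per_dollar, p1, p2)
    return worst[1], worst[2]


for price, pct in RESULTS:
    print(f"${price}: {revenue_per_100(price, pct):.0f} per 100 respondents")
print("Steepest drop:", steepest_drop(RESULTS))
```

On these numbers, revenue per 100 respondents barely moves from $9.99 to $14.99 (about 656 to 631) but falls to about 368 at $19.99. The absolute drop in stated likelihood is similar for the first two steps; the cliff shows up once price is factored in.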

10 Startup Due Diligence Studies

I've run systematic customer validation for startups across veterinary software, auto parts inventory, cybersecurity compliance, healthcare communication, and more. Consistent patterns emerge:

Trust before features: In 8 of 10 studies, trust-building mechanisms outranked feature capabilities in purchase decisions.

Integration is non-negotiable: B2B customers assume your tool works. What they worry about is whether it fits their existing stack.

Price is rarely the real objection: When customers say 'too expensive,' they usually mean 'I don't trust this enough to justify the switching cost'

The magic wand divergence: What customers complain about in Q2 and what they wish for in Q6 are different things. Build for the wish.

Getting Started: The Practical Setup

If you want to replicate this workflow, here's what you need:

1. Claude Code

Anthropic's terminal-based AI agent. It reads skill files that teach it specialised workflows, then executes them autonomously. If you're already using Claude Code for development work, you're halfway there.

2. The Ditto Skill

I've published a complete skill package that teaches Claude Code the entire Ditto workflow. Install it with one command:

npx skills add Ask-Ditto/ditto-product-research-skill

The skill includes 1,266 lines of documentation: the 6-step API workflow, the 7-question framework, demographic filter reference, common mistakes, question design playbooks for different research types, and a complete 3-phase worked example.

3. A Ditto API Key

The free tier requires no credit card and no sales call. Run this in your terminal:

curl -sL https://app.askditto.io/scripts/free-tier-auth.sh | bash

This opens your browser for Google authentication and returns an API key. Free keys (prefixed rk_free_) give access to roughly 12 shared personas. Paid keys unlock custom demographic filtering and larger panels.
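If you script around the skill, you can check a key's tier before requesting a filtered panel. Read literally, the description above means the earlier pricing prompt, with its country, age and profession filters, would need a paid key. A minimal sketch: only the `rk_free_` prefix and the "roughly 12 shared personas" figure come from the text. The `DITTO_API_KEY` environment variable name and the `check_request` helper are hypothetical.

```python
import os

FREE_PREFIX = "rk_free_"   # prefix of free-tier keys, per the setup notes
FREE_PANEL_APPROX = 12     # free keys reach "roughly 12 shared personas"


def check_request(api_key: str, panel_size: int, wants_filters: bool) -> list[str]:
    """Hypothetical pre-flight check: warn about requests a free key probably can't satisfy."""
    warnings = []
    if api_key.startswith(FREE_PREFIX):
        if wants_filters:
            warnings.append("custom demographic filters need a paid key")
        if panel_size > FREE_PANEL_APPROX:
            warnings.append(f"free keys share roughly {FREE_PANEL_APPROX} personas; asked for {panel_size}")
    return warnings


if __name__ == "__main__":
    key = os.environ.get("DITTO_API_KEY", "")  # hypothetical variable name
    for warning in check_request(key, panel_size=10, wants_filters=True):
        print("warning:", warning)
```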

4. Natural Language Prompts

Once installed, you just talk to Claude Code:

'Validate whether there's a market for an AI-powered recipe app for people with dietary restrictions. Test with 10 US adults aged 25-45.'

'Run a pricing study for my project management SaaS. Test sensitivity between $9/month and $49/month with 10 tech professionals.'

'Test these three taglines with 10 Canadian millennials and tell me which wins.'

'Do competitive intelligence on meal kit delivery services. What makes people choose one over others?'

Claude Code reads the skill, designs appropriate questions, executes the research, and returns findings with a shareable link. You never touch the API directly.

What This Changes for Product Teams

The implication isn't just 'research is faster now.' It's that research can become an operational tool rather than a strategic project.

Consider the decisions your team made in the last quarter. How many were validated against customer input versus informed by stakeholder opinion? If the ratio skews heavily toward opinion, the bottleneck was almost certainly time and cost, not willingness.

When research takes 30 minutes and costs a fraction of a focus group, the calculation changes:

Pricing decisions can be tested before you commit, not after you ship

Feature prioritisation can incorporate actual customer preferences, not just sales team anecdotes

Positioning can be validated with target customers before you redesign the marketing site

Competitive intelligence can surface why customers chose alternatives, not just what alternatives exist

Objection handling can address real barriers identified by research, not hypothesised barriers from internal brainstorms

The teams that figure this out first will ship products that actually match customer needs. The teams that don't will continue optimising for the loudest voice in the room.

The Question Worth Asking

I've spent enough time with product teams to know the response this will generate: 'Synthetic personas aren't real customers.' And that's true. They're AI-powered approximations calibrated to demographic and behavioural data.

But here's the question worth asking: What are you comparing them to? If the alternative is comprehensive human research that takes 6 weeks and costs £40,000, then yes, synthetic research is a compromise. If the alternative is no research at all, which is what happens 90% of the time, then synthetic research is a massive improvement.

The realistic baseline isn't 'perfect research.' It's 'stakeholder opinions and competitive benchmarking.' Against that baseline, 10 synthetic personas answering 7 validated questions about your specific product is categorically more useful.

The tools exist. The skill is published. The free tier is available. The only remaining variable is whether you'll make decisions based on evidence or continue making them based on whoever spoke loudest in the last meeting.

I know which I'd prefer.

Want to try this yourself? The Ditto product research skill is available at github.com/Ask-Ditto/ditto-product-research-skill. Full documentation is at askditto.io/claude-code.