The product launch is, without question, the most theatrical event in a product marketer's calendar. Weeks of preparation, cross-functional coordination that would test the patience of a diplomat, a launch day that feels like opening night, and then – silence. Or, worse, the wrong kind of noise.

A launch that lands flat is not just embarrassing. It's expensive. The engineering time, the design sprints, the enablement materials, the PR spend, the event booth – all of it predicated on the assumption that the market wants what you've built, understands what you've built, and is prepared to pay for what you've built.

Assumptions, as it turns out, are the load-bearing walls of most product launches. And they collapse with uncomfortable regularity.

The statistic that circulates most widely is that roughly 40% of product launches miss their revenue targets. But the more interesting statistic, the one you rarely see cited, is how many of those failures could have been predicted by simply asking the target market a few pointed questions before committing the budget. The answer, based on post-mortem analysis across industries, is: most of them. The concept didn't resonate. The feature priority was wrong. The price point was misjudged. The messaging confused rather than clarified. None of these are execution failures. They're research failures – or, more accurately, the absence of research entirely.

The Two Phases Every Launch Needs (and Most Only Do One)

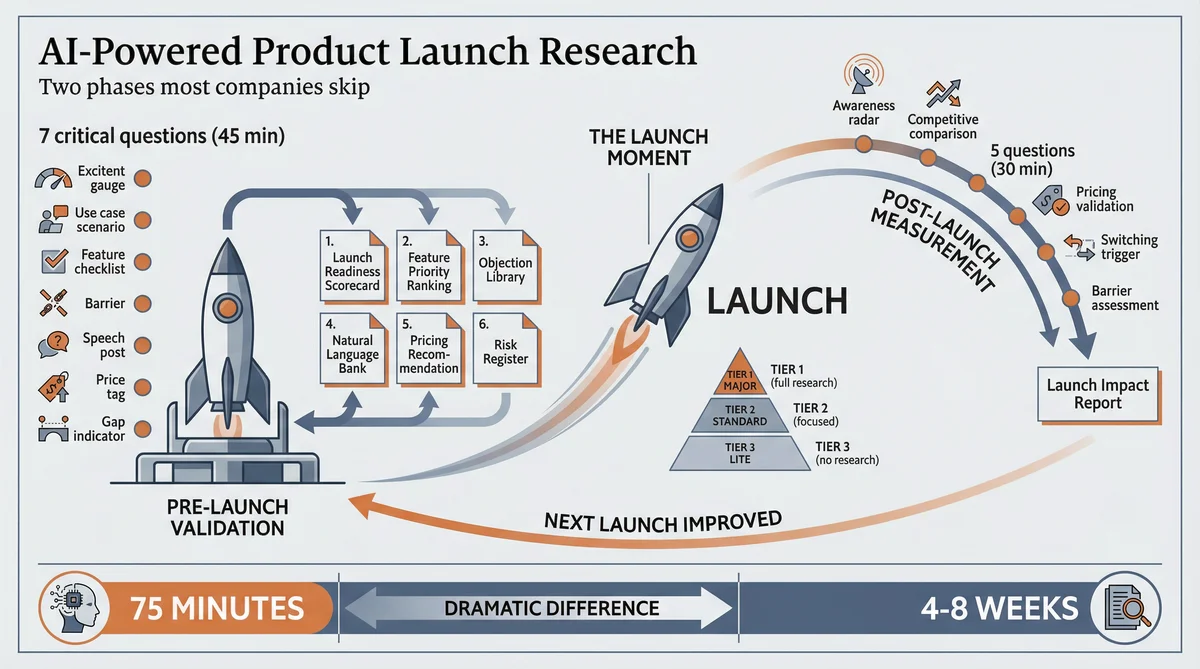

Product launch research has two distinct phases, and the overwhelming majority of companies only do the second one, if they do any research at all.

Phase 1: Pre-launch concept validation. Before you've committed resources, before the launch plan exists, before the press release is drafted – test whether the market actually wants what you're about to build or release. This is the phase that gets skipped. Not because teams don't believe in it, but because traditional research takes four to six weeks and costs $15,000 to $30,000. By the time the findings arrive, the launch date is set and the budget is spent.

Phase 2: Post-launch sentiment measurement. After the launch, measure how the market responded. Did the messaging land? Did the right audience notice? Did the pricing feel right? Are there objections nobody anticipated? This phase is more common, but it's typically conducted through internal metrics (sign-ups, downloads, sales pipeline) rather than direct market feedback. The numbers tell you what happened. They don't tell you why.

The combination of these two phases creates a feedback loop: validate before you launch, then measure after you launch, then use both datasets to improve the next launch. It sounds obvious. It's also remarkably rare in practice, because until recently the research itself was too slow and too expensive to justify doing both.

Pre-Launch: The Seven Questions That Prevent Expensive Mistakes

Pre-launch concept validation is not a focus group. It's not a survey. It's a structured study designed to test the specific assumptions that, if wrong, will sink the launch.

Ditto is a synthetic research platform with over 300,000 AI-powered personas, each with a demographic profile, a professional background, and a coherent set of opinions. Claude Code is an AI agent that orchestrates the entire research workflow: designing the study, recruiting personas, asking questions, interpreting responses, and generating deliverables.

A pre-launch concept validation study – 10 personas, 7 questions – takes approximately 25 minutes. Claude Code then transforms the responses into a launch readiness assessment. Total time: roughly 45 minutes.

Here are the seven questions, and why each one matters:

Q1: "When you first hear about [product/feature description], what comes to mind? What excites you? What makes you sceptical?"

Initial reaction testing. This question captures the gut response before any rational analysis kicks in. When seven out of ten personas respond with confusion rather than excitement, you have a messaging problem to solve before launch day. When they respond with scepticism about a specific claim, you've identified the objection your sales team will face most frequently.

Q2: "How would this fit into your current workflow or life? Walk me through when and how you'd use it."

Use case validation. The gap between how you imagine customers using the product and how they imagine using it is frequently enormous. This question exposes that gap. When personas describe use cases you hadn't considered, that's positioning gold. When they can't imagine a use case at all, that's a problem to solve before the launch materials go to print.

Q3: "What's the FIRST thing you'd want to try? What feature matters most to you?"

Feature prioritisation from the buyer's perspective. Product teams have their own hierarchy of features. Buyers have a different one. When the feature you planned to lead with ranks fourth in buyer priority, your demo script needs rewriting. This question prevents the common launch mistake of leading with what's technically impressive rather than what's commercially compelling.

Q4: "What would stop you from trying this? What's the biggest barrier?"

Objection identification. Every product faces resistance. The question is whether you discover the objections before launch (when you can prepare responses and adjust the offering) or after launch (when they're already killing conversion). This question builds your objection library weeks before the first sales call.

Q5: "How would you describe this to a friend or colleague in one sentence?"

Natural language capture. This is, in my view, the single most undervalued question in launch research. When ten personas independently describe your product, the phrases they use are more effective than anything your copywriting team will produce. Why? Because it's the language that buyers actually use with each other. The best taglines come from this question, not from brainstorming sessions.

Q6: "What would you expect to pay for this? What would feel like a steal versus too expensive?"

Price anchoring. This isn't a full pricing study (for that, see our guide on pricing research with AI agents). It's a pre-launch sanity check: is our intended price point in the right neighbourhood? When eight personas say "I'd expect to pay around $50 a month" and your price is $200 a month, you have a value perception problem to solve before launch. The framing of "steal versus too expensive" reveals not just the acceptable range but how buyers think about the value exchange.
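As a rough illustration of this sanity check, the sketch below assumes each persona's Q6 answer has already been reduced to three monthly prices: what they expect to pay, what would feel like a steal, and what would feel too expensive. The `PriceAnswer` structure, the use of medians and the verdict wording are illustrative assumptions, not part of Ditto or Claude Code, and the check is deliberately cruder than a full pricing study.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class PriceAnswer:
    """One persona's Q6 answer in dollars per month (hypothetical structure)."""
    expected: float
    steal: float
    too_expensive: float


def price_sanity_check(answers: list[PriceAnswer], intended_price: float) -> dict:
    """Compare an intended price against the range personas consider acceptable."""
    if not answers:
        raise ValueError("need at least one persona answer")
    low = median(a.steal for a in answers)           # typical "steal" point
    high = median(a.too_expensive for a in answers)  # typical "too expensive" point
    # Fraction of personas whose own "too expensive" point the intended price reaches
    share_rejecting = sum(intended_price >= a.too_expensive for a in answers) / len(answers)
    if intended_price >= high:
        verdict = "above range: value perception problem"
    elif intended_price <= low:
        verdict = "at or below the steal point: possible underpricing"
    else:
        verdict = "within range"
    return {
        "expected": median(a.expected for a in answers),
        "range": (low, high),
        "share_rejecting": share_rejecting,
        "verdict": verdict,
    }


# Eight personas expect about $50 a month; the intended price is $200.
answers = [PriceAnswer(50, 30, 90)] * 8 + [PriceAnswer(120, 80, 250)] * 2
result = price_sanity_check(answers, intended_price=200)
print(result["verdict"], result["share_rejecting"])
```

Medians are used rather than means so that one or two outlier answers do not drag the range around, which matters with only ten personas.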

Q7: "If you could change one thing about this concept, what would it be? What's missing?"

Feature gap identification. The final question catches everything the previous six didn't. Sometimes it surfaces a missing feature that's a genuine dealbreaker. Sometimes it surfaces a communication gap – the feature exists but the description didn't convey it. Both insights are equally valuable pre-launch.
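To keep the wording identical from one study to the next, the seven questions can be stored as templates and filled in with the product description for each study. The sketch below only builds the question text in order, labelled with the purpose described above; sending the questions to personas is left to the orchestration layer, and the `{description}` placeholder and function name are assumptions for illustration.

```python
# (purpose, question template) pairs, in the order they are asked
PRE_LAUNCH_QUESTIONS = [
    ("Initial reaction",
     "When you first hear about {description}, what comes to mind? "
     "What excites you? What makes you sceptical?"),
    ("Use case validation",
     "How would this fit into your current workflow or life? "
     "Walk me through when and how you'd use it."),
    ("Feature prioritisation",
     "What's the FIRST thing you'd want to try? What feature matters most to you?"),
    ("Objection identification",
     "What would stop you from trying this? What's the biggest barrier?"),
    ("Natural language capture",
     "How would you describe this to a friend or colleague in one sentence?"),
    ("Price anchoring",
     "What would you expect to pay for this? "
     "What would feel like a steal versus too expensive?"),
    ("Feature gap identification",
     "If you could change one thing about this concept, what would it be? What's missing?"),
]


def build_study(description: str, questions=PRE_LAUNCH_QUESTIONS) -> list[tuple[str, str]]:
    """Return (purpose, question text) pairs with the product description filled in."""
    return [(purpose, template.format(description=description)) for purpose, template in questions]


study = build_study("an automatic status dashboard")
for number, (purpose, text) in enumerate(study, start=1):
    print(f"Q{number} [{purpose}]: {text}")
```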

What the Pre-Launch Study Produces

Claude Code transforms the raw persona responses into six launch-specific deliverables:

Launch readiness scorecard – an honest assessment of whether the concept resonates, where it lands strongly, and where it needs work before launch day. Not a vanity metric: a diagnostic tool.

Feature priority ranking – ordered by buyer demand rather than engineering effort. This directly informs the demo script: lead with what buyers want to see first, not what the product team is proudest of building.

Objection library – every barrier and concern raised by personas, with suggested responses derived from the positive signals in other answers. Sales gets this before the first prospect call, not after the first lost deal.

Natural language bank – the exact phrases personas used to describe the product. This feeds into website copy, email subject lines, social posts, and the one-sentence elevator pitch. Customer language tends to outperform company language because it is the language buyers already use with each other.

Pricing recommendation – the acceptable range based on persona responses, with guidance on how to frame the value exchange. Not a substitute for rigorous pricing research, but sufficient to avoid launching at a price point that triggers immediate rejection.

Risk register – the specific barriers, concerns, and gaps that could undermine the launch. Each risk includes the frequency with which it was raised ("4 of 10 personas mentioned this") and a suggested mitigation.
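The frequency figure in the risk register is a simple tally. A minimal sketch, assuming each persona's answers have already been coded into short barrier labels (a step not shown here); the persona IDs, labels and mitigation text are hypothetical.

```python
from collections import Counter


def risk_register(coded_barriers: dict[str, list[str]], mitigations: dict[str, str]) -> list[str]:
    """List barriers by how many personas raised them, with a suggested mitigation.

    coded_barriers maps a persona ID to the barrier labels coded from its answers.
    """
    total = len(coded_barriers)
    # set() so a persona who repeats the same concern is counted once
    counts = Counter(label for labels in coded_barriers.values() for label in set(labels))
    # Most frequent first; ties broken alphabetically so the output is stable
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [
        f"{label}: {n} of {total} personas mentioned this. "
        f"Mitigation: {mitigations.get(label, 'to be defined')}"
        for label, n in ranked
    ]


coded = {
    "persona_01": ["setup effort", "price"],
    "persona_02": ["setup effort"],
    "persona_03": ["setup effort", "setup effort"],
    "persona_04": ["data security"],
    "persona_05": [],
}
register = risk_register(coded, {"setup effort": "publish an onboarding checklist"})
for line in register:
    print(line)
```

Counting each barrier at most once per persona keeps the "N of 10" figure honest: one persona repeating a concern three times is still one persona.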

Launch Tiering: Not Every Release Warrants the Same Investment

A brief but important detour into launch discipline. Not every product release needs a full launch programme, and not every launch needs a full research programme.

Best practice, well documented in product marketing literature, distinguishes three launch tiers:

Tier 1 (Major): New product lines, new market entries, rebrands, or features that fundamentally change the value proposition. These justify the full 7-question pre-launch study, the post-launch sentiment study, and comprehensive launch execution.

Tier 2 (Standard): Significant feature updates, new integrations, or enhancements to existing capabilities. These warrant a focused pre-launch study (5 questions, 8 personas, approximately 25 minutes) testing concept resonance and feature priority. Post-launch measurement via metrics is usually sufficient.

Tier 3 (Lite): Bug fixes, minor UI improvements, table-stakes enhancements. No research required. Internal release notes and perhaps a brief customer communication.

The recommended cadence: one to two Tier 1 launches annually, one to two Tier 2 launches per quarter, and monthly or quarterly roundups of Tier 3 releases. Avoid launching during July, August, December, and major global events – the audience isn't paying attention.

The research investment should match the tier. A Tier 1 launch that fails costs enormously more than a Tier 3 release that nobody notices. Allocate your research time accordingly.
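The tier definitions above translate naturally into a small configuration that fixes the research budget per tier. The question counts, persona counts and minutes below come from this guide; the class and field names are hypothetical, and deciding which tier a release belongs to is still a human judgement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Study:
    questions: int
    personas: int
    minutes: int  # approximate, as quoted in this guide


@dataclass(frozen=True)
class TierPlan:
    name: str
    pre_launch: Optional[Study]
    post_launch: Optional[Study]  # None means rely on internal metrics only


TIERS = {
    # Tier 1 pre-launch: ~25 min study plus deliverables, ~45 min in total
    1: TierPlan("Major", Study(7, 10, 45), Study(5, 10, 30)),
    2: TierPlan("Standard", Study(5, 8, 25), None),
    3: TierPlan("Lite", None, None),
}


def research_minutes(tier: int) -> int:
    """Approximate total research time for a launch tier."""
    plan = TIERS[tier]
    return sum(s.minutes for s in (plan.pre_launch, plan.post_launch) if s is not None)


for tier, plan in TIERS.items():
    print(f"Tier {tier} ({plan.name}): ~{research_minutes(tier)} minutes of research")
```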

Post-Launch: Measuring What the Market Actually Thinks

Launch day is not the finish line. It's the starting gun for the second phase of research.

Most companies measure post-launch performance exclusively through internal metrics: sign-up rates, activation rates, pipeline generated, feature adoption. These are essential, but they tell you what is happening without explaining why. If sign-ups are lower than expected, is it because the market doesn't want the product, doesn't understand it, can't find it, or can't afford it? Internal metrics can't distinguish between these causes.

A post-launch sentiment study addresses this gap. Two weeks after launch, run a study with a fresh group of personas – not the same group you used for pre-launch validation. This is important: you want the current market perspective, not a follow-up with people who already have context.

The post-launch study is deliberately lean: 5 questions, 10 personas, approximately 30 minutes.

Q1: "Have you heard of [product]? What have you heard? Where did you see it?"

Awareness measurement. This reveals whether the launch actually penetrated the target audience's consciousness, and through which channels. When most personas haven't heard of it, your distribution failed. When they've heard of it but describe it incorrectly, your messaging failed.
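One way to make the distinction between a distribution failure and a messaging failure explicit is to code each Q1 answer as whether the persona had heard of the product, where they saw it, and whether their description matched the intended positioning. The sketch below assumes that coding has already been done and reads "most" as a simple majority; both the fields and the 50% threshold are assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AwarenessAnswer:
    heard: bool
    channel: Optional[str] = None  # where the persona saw it, if anywhere
    accurate: bool = False         # did their description match the intended positioning?


def diagnose_awareness(answers: list[AwarenessAnswer], threshold: float = 0.5) -> dict:
    """Separate a distribution failure from a messaging failure."""
    aware = [a for a in answers if a.heard]
    aware_share = len(aware) / len(answers)
    accurate_share = sum(a.accurate for a in aware) / len(aware) if aware else 0.0
    if aware_share < threshold:
        diagnosis = "distribution failed"  # most personas have not heard of it
    elif accurate_share < threshold:
        diagnosis = "messaging failed"     # heard of it, but describe it incorrectly
    else:
        diagnosis = "no awareness problem"
    return {
        "aware_share": aware_share,
        "accurate_share": accurate_share,
        "channels": Counter(a.channel for a in aware if a.channel),
        "diagnosis": diagnosis,
    }


answers = (
    [AwarenessAnswer(False)] * 6
    + [AwarenessAnswer(True, "LinkedIn", True)] * 3
    + [AwarenessAnswer(True, "newsletter")]
)
summary = diagnose_awareness(answers)
print(summary["diagnosis"], summary["channels"].most_common())
```

The channel counts are what later feed the awareness gap analysis in the launch impact report.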

Q2: "Based on what you know, how would you compare it to [competitor or current solution]?"

Competitive positioning in the wild. How the market positions your product relative to alternatives – which may be entirely different from how you positioned it. This question catches positioning failures early.

Q3: "What would you expect this to cost? Does the actual price of [price] feel right for what it offers?"

Post-launch pricing validation. Compare this with the pre-launch pricing data. If the pre-launch study said $50 was the sweet spot and you launched at $50, but post-launch personas now say it feels expensive, your messaging hasn't conveyed sufficient value.

Q4: "What would make you switch from your current solution to this?"

Switching trigger identification. This is the sales enablement question. The answers feed directly into outreach messaging, demo scripts, and competitive battlecards.

Q5: "What concerns would you have about trying this? What would hold you back?"

Post-launch barrier assessment. Compare with the pre-launch objection library. New barriers that weren't anticipated require immediate response: updated FAQ, new sales enablement content, perhaps a product adjustment.

The Launch Impact Report

Claude Code combines the pre-launch and post-launch study data to produce a launch impact report. This is the deliverable that closes the loop:

Pre-launch versus post-launch comparison – did the market react as predicted? Where did reality diverge from the study predictions? What does this teach you about the reliability of specific signals?

Messaging effectiveness – is the market describing the product in the language you intended? If the pre-launch natural language bank said buyers would call it "an automatic status dashboard" but the post-launch study reveals they're calling it "another analytics tool," the positioning hasn't landed.

Awareness gap analysis – which channels generated awareness and which didn't? This directly informs budget allocation for the next launch.

Objection evolution – which pre-launch objections materialised, which didn't, and which new ones appeared? The objection library gets updated in real time.

Next-launch recommendations – specific, evidence-based adjustments for the next product release based on the gap between pre-launch expectations and post-launch reality.
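Objection evolution is essentially a set comparison. A minimal sketch, assuming the pre-launch objection library and the post-launch answers have been coded into the same normalised labels (this sketch does not perform that step); the labels are hypothetical, and the "hit rate" is an illustrative extra that speaks to how reliable the pre-launch signals turned out to be.

```python
def objection_evolution(pre_launch: set[str], post_launch: set[str]) -> dict:
    """Classify objections by comparing the pre-launch library with post-launch answers."""
    materialised = pre_launch & post_launch
    return {
        "materialised": materialised,
        "did_not_materialise": pre_launch - post_launch,
        "new": post_launch - pre_launch,
        # Share of predicted objections that actually showed up after launch
        "hit_rate": len(materialised) / len(pre_launch) if pre_launch else 0.0,
    }


pre = {"setup effort", "price", "data security"}
post = {"price", "data security", "migration from current tool"}
evolution = objection_evolution(pre, post)
for bucket in ("materialised", "did_not_materialise", "new"):
    print(bucket, sorted(evolution[bucket]))
```

Tracking the hit rate across several launches is one concrete way to see whether the pre-launch studies are becoming more predictive.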

This report is not a retrospective exercise. It's a forward-looking tool. Every gap between prediction and reality improves the accuracy of the next pre-launch validation study. After three or four launches with this feedback loop running, the pre-launch studies become remarkably predictive.

The Three-Phase Process That Replaces the Six-Week Research Programme

Traditional product launch research follows a sequential process: brief the agency, recruit participants, schedule interviews, conduct the research, analyse findings, present the report. Each step takes days to weeks. Total elapsed time: four to eight weeks, at a cost of $15,000 to $50,000.

The Ditto and Claude Code approach compresses this into three phases. The two research phases take under two hours combined; the phase between them is the launch itself:

Phase 1: Pre-launch validation (approximately 45 minutes). Claude Code researches the product context, designs the 7-question study, recruits 10 personas matching the target buyer profile, asks questions sequentially (each building on previous conversational context), polls for responses, completes the study, and generates the six deliverables. The output: a launch readiness assessment that tells you whether to proceed, adjust, or reconsider.

Phase 2: Launch execution (your existing process). Use the pre-launch insights to inform every element of the launch: demo scripts lead with buyer-prioritised features, messaging uses customer language, sales enablement includes the objection library, and pricing communication addresses the specific value anchors buyers identified.

Phase 3: Post-launch measurement (approximately 30 minutes). Two weeks after launch, Claude Code runs the 5-question post-launch sentiment study with fresh personas. The launch impact report compares pre-launch predictions against post-launch reality, identifies what worked, what didn't, and what to adjust.

Total research time: approximately 75 minutes across the two research phases (45 minutes before launch, 30 after), separated by the launch itself. Compare this with the traditional approach – which, to reiterate, most companies skip entirely because the timeline doesn't fit the launch schedule.

Multi-Segment Launch Research

Products that serve multiple segments face an additional challenge: the same product may resonate differently with different audiences. A new collaboration feature might thrill individual contributors, concern managers (adoption risk), and bore executives (they don't use it directly).

Running the pre-launch study against multiple persona groups – each representing a different buyer segment – reveals these differences before they become launch-day surprises. Claude Code can orchestrate parallel studies with identical questions across three or four groups, then produce a cross-segment comparison showing:

Which segments resonate most strongly with the concept (and should be targeted first)

Which features each segment prioritises (informing segment-specific messaging)

Which objections are segment-specific versus universal (informing targeted enablement)

Whether the pricing perception varies by segment (informing tiered packaging)
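Two of these comparisons can be sketched directly, assuming each segment's answers have already been coded into objection labels and Q3 feature mentions. Objections raised in every segment are treated as universal, objections raised in exactly one segment as segment-specific (anything in between falls into neither bucket), and the most-mentioned feature per segment suggests where that segment's messaging should lead. Segment names and labels are hypothetical, echoing the collaboration-feature example above.

```python
from collections import Counter


def cross_segment(objections: dict[str, set[str]], feature_mentions: dict[str, list[str]]) -> dict:
    """Compare coded study results across buyer segments."""
    universal = set.intersection(*objections.values()) if objections else set()
    # Objections that no other segment raised
    segment_specific = {
        segment: objs - set().union(*(o for s, o in objections.items() if s != segment))
        for segment, objs in objections.items()
    }
    # Most frequently mentioned feature per segment (Q3)
    top_feature = {
        segment: Counter(mentions).most_common(1)[0][0]
        for segment, mentions in feature_mentions.items()
        if mentions
    }
    return {"universal": universal, "segment_specific": segment_specific, "top_feature": top_feature}


objections = {
    "individual contributors": {"learning curve", "price"},
    "managers": {"adoption risk", "price"},
    "executives": {"unclear ROI", "price"},
}
features = {
    "individual contributors": ["live co-editing", "live co-editing", "comments"],
    "managers": ["permissions", "live co-editing", "permissions"],
    "executives": ["summary view"],
}
comparison = cross_segment(objections, features)
print(comparison["universal"], comparison["top_feature"])
```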

The cross-segment comparison is particularly valuable for Tier 1 launches where messaging will be tailored by audience. Without it, companies typically default to messaging that speaks to the segment they understand best (usually their existing customers) and underwhelms the segments they're trying to reach (usually prospects in new markets).

Concept Testing for Products That Don't Exist Yet

A particularly valuable application of pre-launch research is concept testing – validating a product idea before committing engineering resources.

Frame Q1 through Q4 around the problem space and the proposed solution concept. Q5 captures how buyers naturally describe the idea (which becomes your messaging foundation). Q6 and Q7 test price sensitivity and identify feature gaps.

The study design works regardless of whether the product exists, is in development, or is purely hypothetical. The key principle: if the concept doesn't resonate with ten personas representing your target market, it probably won't resonate with the actual market either. Better to discover this during a 45-minute study than after a six-month development cycle.

This connects directly to the DACI framework (Driver, Approver, Contributor, Informed), which is commonly used for launch planning. The concept validation study gives the Driver evidence to present to the Approver. "Eight of ten target buyers expressed strong interest and identified these two features as non-negotiable" is a more persuasive case for greenlighting a build than "we think the market wants this based on some support tickets and competitor analysis."

Measuring Launch Success Beyond Vanity Metrics

Launch success metrics should map to the pre-launch study findings. If the study predicted strong resonance with the messaging, measure whether the messaging actually drove engagement. If the study identified specific objections, track whether those objections appeared in sales conversations.

The standard launch metrics are well established:

Adoption metrics: free trial sign-up rate, activation rate (users reaching the "aha moment"), feature adoption velocity. These test whether the product delivers the value the pre-launch study predicted buyers would find compelling.

Pipeline metrics: qualified pipeline generated, average deal size, sales cycle length. These test whether the go-to-market motion is working as the pre-launch study suggested.

Messaging metrics: website conversion rate, email open and click rates, social engagement. These test whether the customer language harvested from the pre-launch study is more effective than the original messaging.

Competitive metrics: win rate on competitive deals, switching triggers cited by new customers. These test whether the differentiation identified in the study holds up in real competitive situations.
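For the messaging metrics in particular, one simple way to check whether the harvested customer language is actually more effective is to split traffic between the original copy and a customer-language variant and compare conversion rates with a two-proportion z-test. This is a standard statistical test swapped in for illustration, not something the study itself provides, and the visitor and conversion counts below are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for variant B's conversion rate versus A's."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when the pooled conversion rate is 0% or 100%")
    z = (p_b - p_a) / se
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))  # standard normal CDF at |z|
    return z, 2 * (1 - normal_cdf)


# Hypothetical split: original copy (A) versus customer-language copy (B), 5,000 visitors each
z, p = two_proportion_z_test(conv_a=100, n_a=5000, conv_b=150, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these numbers (2% versus 3% conversion) z comes out at roughly 3.2, so the lift is unlikely to be noise; the same relative lift on a few hundred visitors per variant often would not clear that bar.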

The post-launch sentiment study adds a qualitative layer to these quantitative measures. When the numbers say conversion is low, the sentiment study explains why. When adoption is high for one segment and low for another, the cross-segment comparison reveals what's different about their perception.

Stop Launching on Assumption

Product launches fail for knowable reasons. The concept didn't resonate with the target market. The feature priority didn't match buyer priority. The price was misjudged. The messaging created confusion rather than clarity. The objections weren't anticipated. Every one of these failures is, fundamentally, a research failure – an assumption that wasn't tested.

The traditional excuse for skipping launch research was always time and cost. When validation takes six weeks and costs $30,000, it doesn't fit into a launch schedule that's already set. The research arrives after the decisions have been made and the materials have been produced.

That excuse has expired. A pre-launch concept validation takes 45 minutes. A post-launch sentiment measurement takes 30 minutes. Together, they cost roughly the time it takes to review the launch plan in the first place. The deliverables – launch readiness scorecard, feature priority ranking, objection library, natural language bank, pricing recommendation, and risk register – directly inform every element of the launch execution.

For multi-segment products, run the same study against three or four buyer groups and discover which segment to target first, what messaging each segment needs, and whether the pricing works across all of them. For Tier 1 launches, close the loop with a post-launch sentiment study and build a launch impact report that makes every subsequent launch more predictive.

The companies that consistently launch well aren't luckier than the ones that don't. They're better informed. They've asked the market what it thinks before asking the market to buy. That's not a competitive advantage that requires proprietary technology or a seven-figure research budget. It requires asking seven questions of ten personas who represent your target market, and being willing to hear the answers.

Forty-five minutes. That's the gap between a launch built on assumption and a launch built on evidence. Which one sounds more like a sensible use of your budget?