Every product marketer knows the feeling. You have spent three weeks crafting a positioning statement. It is sharp, differentiated, and internally persuasive. The leadership team loves it. The head of product nods approvingly. Someone says "this really captures who we are." Then you put it in front of actual customers, and the silence is deafening. Or worse, you never put it in front of customers at all, because by the time you could have organised the research, the launch window had closed.

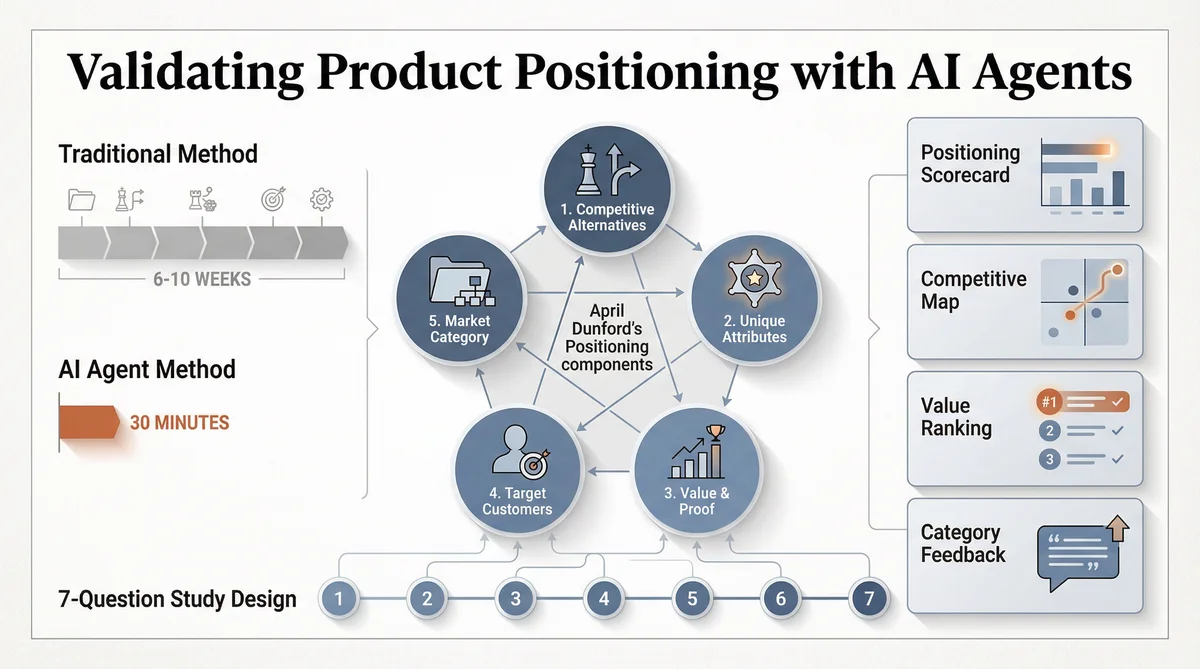

Positioning validation is the most important step that most product marketing teams skip. Not because they do not believe in it, but because the traditional process for conducting it (agency briefings, participant recruitment, interviews, and analysis) takes six to ten weeks and typically costs between ten and thirty thousand dollars. That is an extraordinary amount of time and money to discover that your carefully crafted positioning does not land with the people who matter.

This article explains how to validate product positioning in approximately thirty minutes using two AI-powered tools: Ditto, a synthetic market research platform with over 300,000 AI personas, and Claude Code, Anthropic's agentic coding tool. Together, they transform positioning validation from a quarterly event into a continuous habit. This is not a theoretical exercise. It is a practical, step-by-step workflow that maps directly to the most respected positioning framework in the discipline.

Why Positioning Validation Matters More Than Positioning Itself

April Dunford, author of Obviously Awesome and the foremost authority on B2B positioning, identifies five interdependent components that constitute effective positioning:

Competitive Alternatives: what customers would do if your product did not exist

Unique Attributes: the capabilities that genuinely differentiate you from those alternatives

Value and Proof: the demonstrable outcome of those attributes, with evidence

Target Customers: the specific segment for whom your value is most compelling

Market Category: the frame of reference that makes your value obvious to buyers

The framework is elegant. It is also widely misapplied. Most teams treat positioning as a creative exercise: draft a statement, debate it in a room full of colleagues, pick the one that sounds best, and move on. The problem is that none of the people in that room are the customer. Your head of engineering knows what makes the product technically distinctive. Your CEO knows the vision. Your head of sales knows what prospects ask about. But none of them can reliably predict how a buyer who has never heard of you will react to your positioning when they encounter it for the first time.

That prediction requires validation. And validation requires putting your positioning in front of people who match your ideal customer profile and observing how they respond. Do they understand what you do? Can they articulate your value back to you? Do they categorise you correctly? Do they see you as genuinely different from the alternatives? These are empirical questions. They cannot be answered by internal debate.

Per the 2025 State of Product Marketing Report, 91% of product marketers identify positioning and messaging as their core responsibility. Yet the vast majority of positioning work is never validated with customers before it ships. The frameworks exist. The evidence to feed them does not arrive in time.

The Traditional Approach: Why Most Teams Skip It

The standard process for validating positioning through primary research looks something like this:

Brief a research agency or schedule customer interviews (1 to 2 weeks)

Recruit participants matching your ICP (2 to 4 weeks)

Conduct interviews or run the survey (1 to 2 weeks)

Analyse results and synthesise findings (1 week)

Present to stakeholders and iterate (1 week)

Total elapsed time: six to ten weeks. Total cost: ten to thirty thousand dollars, depending on methodology and agency. And that is for a single round of validation on a single strategic question. If the research reveals that your positioning needs revision, which it usually does, you are looking at another cycle. Two rounds of positioning validation through traditional means can easily consume an entire quarter.
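Because the phases run back to back, the totals are simple sums of the ranges in the list above. A quick check in Python (the phase names are shortened paraphrases of that list):

```python
# Phase durations in weeks as (minimum, maximum), taken from the list above.
PHASES = {
    "Brief agency or schedule interviews": (1, 2),
    "Recruit ICP participants": (2, 4),
    "Conduct interviews or run survey": (1, 2),
    "Analyse and synthesise": (1, 1),
    "Present and iterate": (1, 1),
}


def elapsed_weeks(phases: dict[str, tuple[int, int]], rounds: int = 1) -> tuple[int, int]:
    """Best-case and worst-case elapsed weeks when phases run sequentially."""
    low = sum(lo for lo, _ in phases.values()) * rounds
    high = sum(hi for _, hi in phases.values()) * rounds
    return low, high


print(elapsed_weeks(PHASES))            # (6, 10)
print(elapsed_weeks(PHASES, rounds=2))  # (12, 20): a quarter is roughly 13 weeks
```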

The consequence is predictable. Teams skip the validation. They go with the positioning that sounds best in the conference room. They launch the messaging that the most senior person in the meeting preferred. They build an entire go-to-market strategy on assumptions about what customers care about, rather than evidence. Then, six months later, when win rates are flat and the sales team is complaining that "the messaging doesn't land," they wonder what went wrong.

What went wrong is that they never tested the foundation. Everything that follows positioning (messaging, sales enablement, competitive battlecards, content strategy) depends on the positioning being correct. If the positioning is wrong, everything built on top of it is wrong too. It is the most expensive shortcut in product marketing.

The AI Agent Approach: Positioning Validation in Thirty Minutes

The combination of Ditto and Claude Code makes it possible to validate positioning against Dunford's full framework in approximately thirty minutes, at a fraction of the traditional cost. Here is how the workflow operates, step by step.

Step 1: Contextual Research

Claude Code begins by researching the product, its competitors, and the market landscape. It reads the company website, analyses competitor positioning, reviews relevant industry coverage, and builds a contextual brief. This is the equivalent of the "desk research" phase that a human researcher would conduct before designing a study, except it happens in roughly two minutes rather than two days.

The output of this step is a research context document that informs every subsequent decision: which demographic filters to apply, which competitors to reference in the study questions, what language the target market uses, and what the current positioning gaps might be.
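One way to picture the research context document is as a small structured record that later steps read from. The sketch below is an assumption for illustration: the `ResearchContext` class and its field names are hypothetical, not an output format defined by Claude Code or Ditto.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchContext:
    """Hypothetical shape for the Step 1 brief; every field name is illustrative."""
    product: str
    problem_space: str
    competitors: list[str] = field(default_factory=list)
    buyer_language: list[str] = field(default_factory=list)    # phrases the market uses
    positioning_gaps: list[str] = field(default_factory=list)  # hypotheses to probe later
    demographic_filters: dict[str, str] = field(default_factory=dict)

    def summary(self) -> str:
        """Render the brief as plain text for the study-design step."""
        def joined(items: list[str], empty: str) -> str:
            return ", ".join(items) if items else empty

        filters = [f"{key}={value}" for key, value in self.demographic_filters.items()]
        return "\n".join([
            f"Product: {self.product}",
            f"Problem space: {self.problem_space}",
            "Competitors: " + joined(self.competitors, "none identified"),
            "Buyer language: " + joined(self.buyer_language, "none captured"),
            "Gaps to probe: " + joined(self.positioning_gaps, "none yet"),
            "Filters: " + joined(filters, "none set"),
        ])


brief = ResearchContext(
    product="ExampleApp",  # hypothetical product
    problem_space="expense reporting",
    competitors=["Competitor A", "Competitor B"],
    demographic_filters={"country": "US", "age": "30-50"},
)
print(brief.summary())
```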

Step 2: Study Design Mapped to Dunford's Framework

Claude Code then designs a seven-question study where each question maps directly to one or more of Dunford's five positioning components. The questions are open-ended and qualitative, designed to elicit natural language responses rather than scaled ratings. This matters. Positioning validation is not about whether customers rate your value proposition 4.2 out of 5. It is about whether they can articulate what you do, how they categorise you, and what would stop them from buying.

Here is a representative study design for a B2B SaaS product:

Question 1 (Competitive Alternatives): "When you think about [problem space], what comes to mind first? What frustrates you most about the options currently available?"

Question 2 (Status Quo and Workarounds): "Walk me through how you currently solve [problem]. What tools, services, or workarounds do you use? What is missing?"

Question 3 (Unique Attributes and Value): "If I told you there was a product that [unique value proposition], what is your gut reaction? What excites you? What makes you sceptical?"

Question 4 (Market Category): "How would you describe [product] to a colleague? What category would you put it in?"

Question 5 (Competitive Differentiation): "Compared to [competitor A] and [competitor B], what would make you choose a new option? What is the minimum bar?"

Question 6 (Primary Value Driver): "If [product] could only do one thing brilliantly for you, what should that be? Why does that matter more than everything else?"

Question 7 (Adoption Barriers): "What would stop you from trying something like this? What would you need to see or hear to feel confident switching?"

Notice the structure. Questions 1 and 2 establish the competitive landscape from the customer's perspective, not yours. Question 3 tests your value proposition directly, probing for both enthusiasm and scepticism. Question 4 reveals how customers naturally categorise you, which may differ dramatically from how you categorise yourself. Question 5 introduces specific competitors to understand differentiation in context. Question 6 forces prioritisation, revealing the single capability that matters most. Question 7 identifies the barriers you will need to address in your messaging and proof points.

Seven questions. Five positioning components, with Target Customers tested by who you recruit in Step 3. Every angle covered.
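The study design can be treated as data: each question template is paired with the components it probes, which makes it easy to fill the placeholders from the Step 1 context and check that nothing is missed. A minimal sketch, assuming the mappings beyond each question's own heading (for example, Question 5 also probing Unique Attributes) are an editorial reading; the placeholder names and sample context are hypothetical.

```python
# Dunford's five positioning components, as listed earlier in this article.
COMPONENTS = {
    "Competitive Alternatives",
    "Unique Attributes",
    "Value and Proof",
    "Target Customers",
    "Market Category",
}

# (question template, components it probes); placeholders come from the Step 1 context.
QUESTIONS = [
    ("When you think about {problem_space}, what comes to mind first? "
     "What frustrates you most about the options currently available?",
     {"Competitive Alternatives"}),
    ("Walk me through how you currently solve {problem}. What tools, services, "
     "or workarounds do you use? What is missing?",
     {"Competitive Alternatives"}),
    ("If I told you there was a product that {value_proposition}, what is your "
     "gut reaction? What excites you? What makes you sceptical?",
     {"Unique Attributes", "Value and Proof"}),
    ("How would you describe {product} to a colleague? "
     "What category would you put it in?",
     {"Market Category"}),
    ("Compared to {competitor_a} and {competitor_b}, what would make you choose "
     "a new option? What is the minimum bar?",
     {"Competitive Alternatives", "Unique Attributes"}),
    ("If {product} could only do one thing brilliantly for you, what should that "
     "be? Why does that matter more than everything else?",
     {"Value and Proof"}),
    ("What would stop you from trying something like this? What would you need "
     "to see or hear to feel confident switching?",
     {"Value and Proof"}),
]


def build_study(context: dict[str, str]) -> list[str]:
    """Fill every template; a missing placeholder raises KeyError instead of shipping '{product}'."""
    return [template.format(**context) for template, _ in QUESTIONS]


def uncovered_components(recruitment_matches_icp: bool = True) -> set[str]:
    """Components no question probes; Target Customers is covered by who is recruited."""
    covered = set().union(*(components for _, components in QUESTIONS))
    if recruitment_matches_icp:
        covered.add("Target Customers")
    return COMPONENTS - covered


study = build_study({
    "problem_space": "expense reporting",
    "problem": "expense approvals",
    "value_proposition": "closed your books two days faster",
    "product": "ExampleApp",
    "competitor_a": "Competitor A",
    "competitor_b": "Competitor B",
})
print(uncovered_components())  # set()
```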

Step 3: Persona Recruitment via Ditto

Claude Code instructs Ditto to recruit ten personas matching the target customer profile. Ditto's synthetic personas are not generic language model outputs. Each is statistically grounded in census data, behavioural research, and cultural context. The platform covers fifteen countries representing 65% of global GDP, with over 300,000 personas in the United States alone. EY Americas has validated a 95% correlation between Ditto's synthetic responses and traditional research methods.

Recruitment filters include country, state, age range, gender, employment status, education level, and parental status. For a B2B positioning study targeting mid-market SaaS buyers in the United States, you might recruit ten personas aged 30 to 50, employed, with a bachelor's degree or higher. Recruitment takes approximately thirty seconds. Compare that to the two to four weeks required to recruit human participants through a research panel.
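The recruitment filters named above can be captured as a small typed configuration and sanity-checked before anything is sent. This is a sketch under assumptions: `RecruitmentFilters`, its field names, and values such as `"bachelors_or_higher"` are hypothetical and do not reflect Ditto's actual API schema.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class RecruitmentFilters:
    """Hypothetical container for the filters listed above; not Ditto's real schema."""
    country: str
    group_size: int = 10
    state: Optional[str] = None
    age_min: Optional[int] = None
    age_max: Optional[int] = None
    gender: Optional[str] = None
    employment_status: Optional[str] = None
    education_level: Optional[str] = None
    parental_status: Optional[str] = None

    def __post_init__(self) -> None:
        if self.group_size < 1:
            raise ValueError("group_size must be at least 1")
        if (self.age_min is not None and self.age_max is not None
                and self.age_min > self.age_max):
            raise ValueError("age_min cannot exceed age_max")

    def to_payload(self) -> dict:
        """Drop unset filters so only the constraints you chose are sent."""
        return {key: value for key, value in asdict(self).items() if value is not None}


# The mid-market SaaS example from the paragraph above.
mid_market_buyers = RecruitmentFilters(
    country="US",
    age_min=30,
    age_max=50,
    employment_status="employed",
    education_level="bachelors_or_higher",
)
print(mid_market_buyers.to_payload())
```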

Step 4: Data Collection

Claude Code submits each question to the Ditto study via API. Responses are collected asynchronously, typically completing within two to four minutes. Each persona provides a qualitative, natural language response that reflects their demographic profile, behavioural patterns, and decision-making tendencies. The responses read like interview transcripts, not survey data.

This is worth emphasising. Traditional positioning validation produces either quantitative survey data ("Rate this statement 1-5") or qualitative interview transcripts. Ditto produces the latter. The responses contain the specific language, objections, comparisons, and emotional reactions that make positioning research genuinely useful. A response to Question 3 might read: "My gut reaction is that it sounds too good to be true. I have heard the AI pitch before and it usually means 'works in the demo, breaks in production.' If you could show me a case study from someone in my industry who actually uses this daily, I would pay attention." That kind of response tells you exactly what your messaging needs to address. A 3.8 out of 5 rating does not.
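The collection step can be sketched with Python's `asyncio`. The design choice here is an assumption: questions are asked in order, so each one behaves like a turn in an interview, while the ten personas answer each question concurrently. `ask_persona` is a hypothetical placeholder for whatever client call your research platform exposes; it only simulates latency and returns a stub answer.

```python
import asyncio
import random


async def ask_persona(persona_id: int, question: str, history: tuple[str, ...]) -> str:
    """Hypothetical stand-in for a platform API call; replace with a real client.

    `history` holds the questions already asked, so a real call could keep context.
    """
    await asyncio.sleep(random.uniform(0.01, 0.03))  # simulated network latency
    return f"persona {persona_id} answers Q{len(history) + 1}"


async def run_interviews(persona_ids: list[int], questions: list[str]) -> dict[int, list[str]]:
    """Ask questions in order; within each question, all personas answer concurrently."""
    transcripts: dict[int, list[str]] = {pid: [] for pid in persona_ids}
    asked: list[str] = []
    for question in questions:
        answers = await asyncio.gather(
            *(ask_persona(pid, question, tuple(asked)) for pid in persona_ids)
        )
        for pid, answer in zip(persona_ids, answers):  # gather preserves input order
            transcripts[pid].append(answer)
        asked.append(question)
    return transcripts


if __name__ == "__main__":
    questions = [f"Question {n}" for n in range(1, 8)]
    result = asyncio.run(run_interviews(list(range(10)), questions))
    print(result[0])  # seven stub answers from persona 0
```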

Step 5: Synthesis and Deliverables

Once all responses are collected, Claude Code triggers Ditto's study completion analysis, which generates an overall summary, identifies key segments within the respondent group, highlights divergences and consensus points, and suggests follow-up questions. Claude Code then synthesises this into a set of positioning deliverables:

Positioning Validation Scorecard: how each of Dunford's five components performed against the target audience, with supporting evidence from persona responses

Competitive Alternative Map: what customers actually do today, which is frequently different from what you assume they do

Value Resonance Ranking: which value propositions landed strongest, which fell flat, and which generated scepticism

Market Category Feedback: how customers naturally describe and categorise you, which may differ significantly from your intended category

Proof Point Gap Analysis: where customers expressed scepticism and what evidence they would need to believe your claims

Quotable Insights: direct persona quotes suitable for internal positioning documents and stakeholder presentations
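For the Value Resonance Ranking, one simple approach is to code each persona's reaction to each value proposition and rank by share. The three codes, the tie-break rule, and the sample data below are illustrative assumptions, not Ditto's analysis method or real study results.

```python
from collections import Counter

# Illustrative coding scheme: one label per persona per value proposition.
CODES = ("resonated", "flat", "sceptical")


def resonance_ranking(coded: dict[str, list[str]]) -> list[tuple[str, float, float]]:
    """Rank value propositions, strongest first.

    Sort key: highest share of 'resonated', then lowest share of 'sceptical'.
    Returns (proposition, resonated_share, sceptical_share) tuples.
    """
    rows = []
    for proposition, labels in coded.items():
        unexpected = set(labels) - set(CODES)
        if unexpected:
            raise ValueError(f"unexpected codes for {proposition!r}: {sorted(unexpected)}")
        counts = Counter(labels)
        total = len(labels) or 1  # avoid dividing by zero for an uncoded proposition
        rows.append((proposition, counts["resonated"] / total, counts["sceptical"] / total))
    return sorted(rows, key=lambda row: (-row[1], row[2]))


# Placeholder codes for ten personas; not real study data.
sample = {
    "Saves analyst time": ["resonated"] * 6 + ["flat"] * 2 + ["sceptical"] * 2,
    "AI-powered insights": ["resonated"] * 3 + ["sceptical"] * 7,
}
for proposition, resonated, sceptical in resonance_ranking(sample):
    print(f"{proposition}: {resonated:.0%} resonated, {sceptical:.0%} sceptical")
```

A high sceptical share is also a natural pointer into the Proof Point Gap Analysis: those are the claims that need evidence.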

Total elapsed time from start to finished deliverables: approximately thirty minutes. Total cost: a fraction of a single traditional research engagement.

Advanced: Cross-Segment Positioning Comparison

Positioning rarely lands uniformly across all segments. Enterprise buyers, mid-market teams, and SMBs often respond to the same positioning in dramatically different ways. A value proposition that resonates with a startup CTO may fall entirely flat with an enterprise procurement team.

Claude Code can orchestrate this comparison by running the identical seven-question study against multiple Ditto groups simultaneously: one group matching your SMB ICP, another matching enterprise evaluators, a third matching technical buyers. The output is a segment-by-segment comparison matrix showing exactly where your positioning resonates, where it needs adaptation, and where it fails entirely.
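Once each segment's study has been synthesised, the comparison matrix is a small tabulation, and the most useful rows are the ones where segments disagree. A sketch, assuming each component has already been given a verdict of "resonates", "adapt", or "fails" per segment; the verdict labels, segment names, and sample values are hypothetical.

```python
# Dunford's components in a fixed display order.
COMPONENTS = [
    "Competitive Alternatives",
    "Unique Attributes",
    "Value and Proof",
    "Target Customers",
    "Market Category",
]


def divergent_components(results: dict[str, dict[str, str]]) -> list[str]:
    """Components whose verdict differs between at least two segments."""
    divergent = []
    for component in COMPONENTS:
        verdicts = {by_component.get(component, "n/a") for by_component in results.values()}
        if len(verdicts) > 1:
            divergent.append(component)
    return divergent


def render_matrix(results: dict[str, dict[str, str]]) -> str:
    """Plain-text matrix: one row per component, one column per segment."""
    segments = list(results)
    first = max(len(c) for c in COMPONENTS) + 2
    widths = [max(len(s), len("resonates")) + 2 for s in segments]
    header = "Component".ljust(first) + "".join(s.ljust(w) for s, w in zip(segments, widths))
    lines = [header.rstrip()]
    for component in COMPONENTS:
        cells = [results[s].get(component, "n/a").ljust(w) for s, w in zip(segments, widths)]
        lines.append((component.ljust(first) + "".join(cells)).rstrip())
    return "\n".join(lines)


# Hypothetical verdicts for three segments.
all_resonate = {c: "resonates" for c in COMPONENTS}
example = {
    "SMB": dict(all_resonate),
    "Enterprise": {**all_resonate, "Value and Proof": "adapt"},
    "Technical": {**all_resonate, "Market Category": "fails"},
}
print(render_matrix(example))
print(divergent_components(example))  # ['Value and Proof', 'Market Category']
```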

This kind of cross-segment analysis would require three separate research engagements through traditional means, tripling the cost and timeline. With Ditto and Claude Code, it adds approximately twenty minutes to the workflow, because the studies run in parallel.

Iterative Validation: The Real Advantage

The most significant benefit of this workflow is not the speed of a single validation cycle. It is the ability to iterate. When positioning validation takes six weeks, you get one shot. You draft your positioning, test it, and live with whatever the research reveals. There is no time to revise and retest before the launch deadline.

When validation takes thirty minutes, you can run three rounds in a single afternoon. Draft your positioning. Test it. Revise based on the results. Test the revision. Refine again. Test the refinement. By the end of the day, you have a positioning framework that has been validated through thirty persona interviews (ten per round, 210 individual answers) across three iterations, with each round informed by the findings of the previous one. That is more primary research than most companies conduct in a year.
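The test, revise, retest loop can be sketched as below, assuming ten personas and seven questions per round as in Steps 2 and 3. `run_study` and `revise` are hypothetical hooks you would wire to your own tooling. The loop stops revising after the final test, so the last draft in the history is one that has actually been tested.

```python
from dataclasses import dataclass
from typing import Callable

PERSONAS_PER_ROUND = 10  # Step 3: ten personas per study
QUESTIONS_PER_ROUND = 7  # Step 2: seven questions per study


@dataclass
class Round:
    draft: str
    findings: object
    personas: int = PERSONAS_PER_ROUND
    answers: int = PERSONAS_PER_ROUND * QUESTIONS_PER_ROUND


def iterate(draft: str,
            run_study: Callable[[str], object],
            revise: Callable[[str, object], str],
            rounds: int = 3) -> list[Round]:
    """Test a draft, revise it from the findings, and retest, for `rounds` tests."""
    history: list[Round] = []
    for i in range(rounds):
        findings = run_study(draft)
        history.append(Round(draft, findings))
        if i < rounds - 1:  # no revision after the final test
            draft = revise(draft, findings)
    return history


history = iterate(
    "v1",
    run_study=lambda d: f"findings for {d}",
    revise=lambda d, f: d[:-1] + str(int(d[-1]) + 1),  # stub: bump the version number
)
print([r.draft for r in history])        # ['v1', 'v2', 'v3']
print(sum(r.personas for r in history))  # 30
print(sum(r.answers for r in history))   # 210
```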

This changes the psychology of positioning work. It moves from a high-stakes, get-it-right-the-first-time exercise to an experimental, evidence-driven process. Product marketers can afford to be bold with their initial hypotheses, because they know the cost of being wrong is thirty minutes, not thirty thousand dollars.

Where Positioning Validation Fits in the PMM Operating System

Positioning is the foundation upon which everything else in product marketing is built. Messaging translates positioning into customer-facing language. Go-to-market strategy operationalises it into channel, motion, and segment decisions. Sales enablement materials encode it into pitch decks, battlecards, and objection handling guides. Content marketing amplifies it across the funnel. Every downstream function inherits the assumptions embedded in your positioning.

Which means that every downstream function inherits the errors in your positioning, too. If your competitive alternative map is wrong, your battlecards will set the wrong landmine questions. If your market category is wrong, your messaging will confuse rather than clarify. If your value proposition does not resonate with your actual target segment, your sales team will struggle to close regardless of how polished the pitch deck is.

Validated positioning is not a nice-to-have. It is the load-bearing wall. And with Ditto and Claude Code, there is no longer any reason to skip it.

When Synthetic Validation Is Not Enough

It would be irresponsible to present this as a complete replacement for human research. Synthetic personas have not used your specific product, so they cannot validate positioning claims rooted in product experience. They have not spoken with your sales team, attended your events, or read your documentation. For positioning that depends on claims about user experience, product quality, or customer service, you will still need feedback from real users.

The recommended approach is hybrid. Use Ditto and Claude Code as the fast first pass. Validate your positioning hypotheses against synthetic personas, identify the strongest candidates, refine through iteration, and arrive at a shortlist that has already survived sixty or more data points. Then invest in a smaller, more targeted round of human validation with real customers, focusing specifically on the areas where synthetic research cannot go: product-specific experience, relationship-driven trust signals, and ultra-niche domain knowledge.

This approach typically reduces traditional research spend by sixty to eighty percent whilst increasing the total volume of validation evidence by five to ten times. You get more data, faster, at lower cost, with human research reserved for the questions that genuinely require it.

Getting Started

If you are a product marketer who has read Obviously Awesome, nodded along to Dunford's framework, and then quietly shelved it because validating five interdependent positioning components with real customers seemed impractical, this workflow exists for you.

The tools are available today. Ditto provides the synthetic research panel. Claude Code orchestrates the entire workflow, from research to study design to insight synthesis. The seven questions above will work for most B2B and B2C positioning studies with minimal modification. The first validation will take approximately thirty minutes. The second, now that you know the workflow, will take twenty.

Product positioning is not a creative exercise. It is an empirical question with a testable answer. The only thing that has changed is how quickly, and cheaply, you can now test it.

This is the second article in a series on using AI agents for product marketing. The first, Using Ditto and Claude Code for Product Marketing, provides a high-level overview of how every PMM discipline maps to these tools. Future articles will cover messaging testing, competitive intelligence automation, pricing research, and the research-to-publication content engine.