The Difference Between Knowing and Understanding

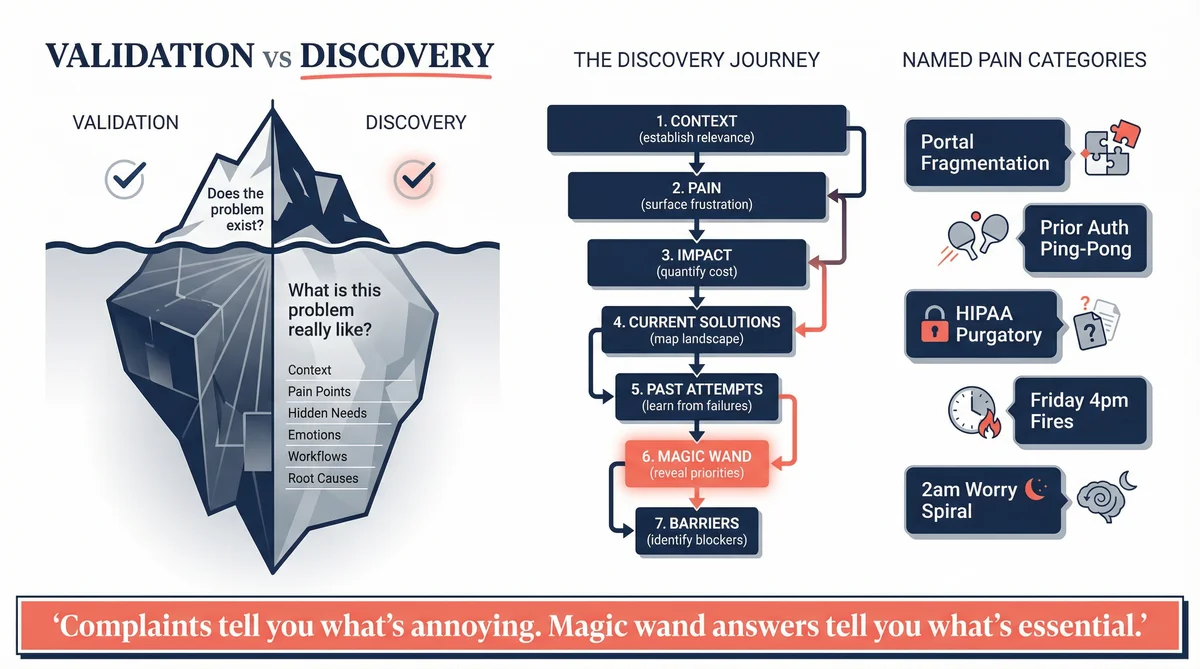

Problem framing tells you that a problem exists. Discovery research tells you what it actually feels like to have that problem.

This distinction matters more than most teams realise. You can validate that "busy professionals struggle to eat healthy" in fifteen minutes. But that validation doesn't tell you when the struggle peaks, what triggers it, what they've already tried, why those attempts failed, or what would actually make them change their behaviour.

Discovery research is the difference between a doctor who confirms you're sick and one who understands exactly what's wrong.

Most teams skip this stage. They validate a problem exists, get excited, and start building. Six months later, they have a product that technically solves the problem but misses something fundamental about how people actually experience it.

That "something fundamental" is what discovery research finds.

What Discovery Research Actually Means

Discovery is about depth. You're not asking whether the problem is real. You already know it's real. You're asking:

How often does this problem occur?

What triggers it?

How do people currently cope?

What have they tried before?

Why did those solutions fail?

What would a solution need to do?

What would prevent them from adopting one?

These questions can't be answered with surveys or rating scales. They require stories. Discovery research is fundamentally qualitative. It invites people to tell you about their experience in their own words, then listens carefully for patterns.

The 7-Question Framework

A framework of non-leading questions forms the backbone of discovery research. Each question serves a specific purpose:

1. Establish relevance: "How often do you encounter [problem]? Walk me through a recent example."

2. Surface pain: "What's the most frustrating part of dealing with [problem]?"

3. Quantify impact: "How much time or money does this cost you per week? Per month?"

4. Map current solutions: "What tools or workarounds do you currently use? What works? What doesn't?"

5. Explore past attempts: "Have you tried other solutions? What happened? Why did you stop?"

6. Reveal priorities: "If you could wave a magic wand and fix ONE thing about this, what would it be?"

7. Identify barriers: "What would make you hesitant to try a new solution, even if it promised to help?"

Notice what this framework does: it moves from broad context (Q1) through emotional reality and cost (Q2-Q3), current and past solutions (Q4-Q5), the ideal state (Q6), and adoption constraints (Q7). By the end, you understand not just that the problem exists, but how it shows up in daily life.
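One way to keep the seven questions consistent from study to study is to store them as data and fill in the problem description each time. This is a minimal plain-Python sketch, not a Ditto feature; `DiscoveryQuestion`, `FRAMEWORK` and `render_questions` are hypothetical names.

```python
from dataclasses import dataclass


# Hypothetical structure for illustration; not part of Ditto.
@dataclass(frozen=True)
class DiscoveryQuestion:
    number: int
    purpose: str
    template: str  # "[problem]" is replaced with the study's problem description


FRAMEWORK = [
    DiscoveryQuestion(1, "Establish relevance",
                      "How often do you encounter [problem]? Walk me through a recent example."),
    DiscoveryQuestion(2, "Surface pain",
                      "What's the most frustrating part of dealing with [problem]?"),
    DiscoveryQuestion(3, "Quantify impact",
                      "How much time or money does this cost you per week? Per month?"),
    DiscoveryQuestion(4, "Map current solutions",
                      "What tools or workarounds do you currently use? What works? What doesn't?"),
    DiscoveryQuestion(5, "Explore past attempts",
                      "Have you tried other solutions? What happened? Why did you stop?"),
    DiscoveryQuestion(6, "Reveal priorities",
                      "If you could wave a magic wand and fix ONE thing about this, what would it be?"),
    DiscoveryQuestion(7, "Identify barriers",
                      "What would make you hesitant to try a new solution, even if it promised to help?"),
]


def render_questions(problem: str) -> list[str]:
    """Return the seven questions, in framework order, with the placeholder filled in."""
    return [q.template.replace("[problem]", problem) for q in FRAMEWORK]


for line in render_questions("difficulty planning healthy meals"):
    print(line)
```

Keeping the purpose next to each template makes it easy to check, later in analysis, which answers feed which part of the pain taxonomy.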

How to Run Discovery Research in Ditto

Ditto transforms discovery research from a weeks-long interview process into something you can complete in an afternoon. Here's the practical workflow for running discovery studies.

Step 1: Define Your Target Persona

Before creating a study, get specific about who experiences this problem. Vague targeting produces vague insights.

Weak targeting: "People who cook at home"

Strong targeting: "Working parents in urban areas, aged 30-45, who want to cook healthy meals but struggle with time constraints"

In Ditto, you'll translate this into demographic filters when creating your research group. The more precise your filters, the more relevant the responses. Ten highly targeted personas beat fifty loosely matched ones.
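To see why the strong version is more useful, it helps to write it out as explicit conditions. The sketch below is illustrative only: `PersonaFilter` and its field names are hypothetical and are not Ditto's filter schema. Every phrase in the strong description becomes a clause a persona either meets or fails, which the weak description cannot offer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PersonaFilter:
    """Hypothetical filter for the 'strong targeting' example; not Ditto's schema."""
    min_age: int
    max_age: int
    setting: str          # e.g. "urban"
    working_parent: bool
    goal: str             # what the persona is trying to do
    constraint: str       # the struggle that makes the problem relevant

    def matches(self, persona: dict) -> bool:
        # Every clause must hold; a persona missing a field does not match.
        return (
            self.min_age <= persona.get("age", -1) <= self.max_age
            and persona.get("setting") == self.setting
            and persona.get("working_parent") == self.working_parent
            and self.goal in persona.get("goals", [])
            and self.constraint in persona.get("constraints", [])
        )


# "Working parents in urban areas, aged 30-45, who want to cook healthy meals
# but struggle with time constraints"
strong = PersonaFilter(30, 45, "urban", True, "cook healthy meals", "time")
```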

Step 2: Create Your Research Group

In Ditto, a research group is your panel of synthetic personas. For discovery research, a group of 10-15 personas typically provides enough diversity to surface patterns without overwhelming your analysis.

When recruiting your group, consider:

Demographic diversity: Include variation in age, location, and context

Experience levels: Mix people new to the problem with those who've struggled for years

Solution exposure: Include both people who've tried alternatives and those who haven't

The group becomes your "interview panel." Each persona will respond to your questions from their own perspective, drawing on statistically grounded characteristics.
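Before running a study, a quick sanity check on the panel can catch a group that accidentally lacks variation along one of these dimensions. This is a minimal sketch assuming personas are available as plain dictionaries; the attribute names used in the usage example (`age_band`, `experience`, `tried_alternatives`) are hypothetical, and the 10-15 size bounds come from the guidance above.

```python
from collections import Counter


def check_group(group: list[dict], dimensions: list[str],
                min_size: int = 10, max_size: int = 15) -> list[str]:
    """Return human-readable warnings; an empty list means the group looks balanced."""
    warnings = []
    if not min_size <= len(group) <= max_size:
        warnings.append(f"group has {len(group)} personas; expected {min_size}-{max_size}")
    for dim in dimensions:
        # A dimension with a single observed value has no diversity at all.
        values = Counter(p.get(dim) for p in group)
        if len(values) < 2:
            warnings.append(f"no variation in '{dim}'")
    return warnings


# Hypothetical panel: alternating age bands, half new to the problem, a third tried alternatives.
group = [
    {"age_band": "30-37" if i % 2 else "38-45",
     "experience": "new" if i < 6 else "years",
     "tried_alternatives": i % 3 == 0}
    for i in range(12)
]
print(check_group(group, ["age_band", "experience", "tried_alternatives"]))
```

This only detects the complete absence of variation; whether the mix is the *right* one still needs judgement.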

Step 3: Write Discovery-Focused Questions

Discovery questions differ from validation questions. You're not testing hypotheses. You're exploring territory.

Validation question: "Would you pay $50/month for a meal planning service?"

Discovery question: "Walk me through what happens when you try to plan healthy meals for your family. What makes it difficult?"

The 7-question framework maps directly to Ditto study questions. Each question should invite narrative responses. Avoid yes/no formats. You want stories, not data points.
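A rough heuristic can flag draft questions that are likely to produce yes/no answers. The sketch below assumes that a question invites narrative if at least one of its sentences opens with an open-ended word; the word list is an assumption, and the check cannot detect leading phrasing hidden inside an otherwise open question.

```python
import re

# Assumed list of words that usually open a story-inviting question.
OPEN_OPENERS = {"what", "how", "why", "when", "walk", "tell", "describe", "if"}


def invites_narrative(question: str) -> bool:
    """Heuristic: True if any sentence in the question starts with an open-ended word."""
    sentences = [s.strip() for s in re.split(r"[?.!]", question) if s.strip()]
    return any(s.split()[0].lower().strip("\"'") in OPEN_OPENERS for s in sentences)


print(invites_narrative("Would you pay $50/month for a meal planning service?"))
print(invites_narrative("Walk me through what happens when you try to plan healthy meals "
                        "for your family. What makes it difficult?"))
```

Checking per sentence lets a closed opener followed by open follow-ups ("Have you tried other solutions? What happened?") pass, which matches how Q5 is written.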

Step 4: Run the Study and Collect Responses

Once your questions are set, Ditto's synthetic personas respond asynchronously. Each persona answers from their established perspective, providing the kind of detailed, personal responses you'd get from actual interviews.

As responses come in, look for:

Repeated phrases: When multiple personas use similar language, you've found something real

Emotional intensity: Strong reactions ("I HATE when..." or "This drives me crazy...") signal important pain points

Specific examples: Concrete stories reveal actionable details

Contradictions: When personas disagree, you may have discovered a segment boundary
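The first two signals, repeated phrases and emotional intensity, are easy to approximate on exported response text. This is a heuristic sketch under stated assumptions: responses are a hypothetical persona-id-to-text mapping, "repeated" means the same two-word phrase used by at least two different personas, and the emotion markers are the examples from this guide. Specific examples and contradictions still need a human read.

```python
import re
from collections import defaultdict

# Whole-word patterns so that, e.g., "whatever" is not counted as "hate".
EMOTION_PATTERNS = [r"\bhate\b", r"\bnightmare\b", r"\bdrives me crazy\b", r"\bfrustrat\w*"]


def ngrams(text: str, n: int) -> set[str]:
    words = re.findall(r"[a-z']+", text.lower())
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def repeated_phrases(responses: dict[str, str], n: int = 2,
                     min_personas: int = 2) -> dict[str, int]:
    """Map each n-word phrase used by at least `min_personas` personas to that persona count."""
    speakers = defaultdict(set)
    for persona, text in responses.items():
        for phrase in ngrams(text, n):
            speakers[phrase].add(persona)
    return {p: len(s) for p, s in speakers.items() if len(s) >= min_personas}


def emotional_intensity(text: str) -> int:
    """Count strong-emotion markers in one response (case-insensitive)."""
    return sum(len(re.findall(p, text, flags=re.IGNORECASE)) for p in EMOTION_PATTERNS)
```

Counting distinct personas per phrase, rather than raw occurrences, stops one talkative persona from making a phrase look like a pattern.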

Step 5: Use Ditto's Completion Analysis

After all responses are collected, Ditto's AI analyses the full dataset and produces structured insights. This is where patterns become visible.

The completion analysis surfaces:

Common themes: Pain points that appear across multiple personas

Quantified impacts: Aggregated time/money costs from Q3 responses

Priority consensus: What the "magic wand" answers reveal about core needs

Adoption barriers: Consolidated concerns from Q7

This analysis does the initial synthesis work, identifying patterns that would take hours to extract manually from interview transcripts.
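Ditto performs this synthesis for you; the sketch below only shows the shape of the aggregation, which can help if you want to re-check it on exported data. It assumes each persona's answers have already been coded into a hypothetical record with `themes` (pain labels from Q2), `weekly_hours` (the Q3 estimate, or `None` if none was given) and `magic_wand` (a short label for the Q6 answer).

```python
from collections import Counter
from statistics import median


def summarise(coded: list[dict], min_personas: int = 2) -> dict:
    """Aggregate coded responses (hypothetical format) into the four summary views."""
    if not coded:
        raise ValueError("no responses to summarise")
    # set() so each persona counts at most once per theme.
    theme_counts = Counter(t for r in coded for t in set(r["themes"]))
    hours = [r["weekly_hours"] for r in coded if r.get("weekly_hours") is not None]
    wishes = Counter(r["magic_wand"] for r in coded)
    top_wish, top_votes = wishes.most_common(1)[0]
    return {
        "common_themes": [t for t, c in theme_counts.most_common() if c >= min_personas],
        "median_weekly_hours": median(hours) if hours else None,
        "magic_wand": top_wish,
        "magic_wand_share": top_votes / len(coded),
    }
```

The median is used rather than the mean so that one persona with an extreme estimate does not dominate the quantified impact.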

Step 6: Build Your Pain Taxonomy

The goal of discovery research is a structured understanding of the problem space. Take Ditto's analysis and organise it into a pain taxonomy. Each pain category should include a name, description, frequency, and representative quote.

Your taxonomy should also include quantified impact (time cost, money cost, emotional toll), the magic wand priority (the single thing most personas would fix), and a key insight (the non-obvious understanding that emerged).

This taxonomy becomes your product design foundation. Every feature should address one of these named pain points.
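The taxonomy described above maps naturally onto a small data structure, and the "every feature should address a named pain point" rule becomes a simple check. This is a minimal sketch; the class names, field names and the `unmapped_features` helper are hypothetical, not part of Ditto.

```python
from dataclasses import dataclass


@dataclass
class PainCategory:
    name: str
    description: str
    frequency: str              # e.g. "daily" or "9 of 12 personas"; format is your choice
    representative_quote: str


@dataclass
class PainTaxonomy:
    categories: list[PainCategory]
    impact: dict[str, str]      # e.g. {"time": ..., "money": ..., "emotional toll": ...}
    magic_wand_priority: str
    key_insight: str

    def names(self) -> set[str]:
        return {c.name for c in self.categories}


def unmapped_features(features: dict[str, str], taxonomy: PainTaxonomy) -> list[str]:
    """Return features whose target pain point is not a named category in the taxonomy."""
    return sorted(f for f, pain in features.items() if pain not in taxonomy.names())
```

Any feature returned by `unmapped_features` is a candidate for cutting, or a sign that the taxonomy is missing a category.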

Using Claude Code to Orchestrate Discovery Research

Claude Code can manage the entire discovery research workflow, from study design through analysis. Here's how the integration works.

Automated Study Creation

Describe your target persona and problem domain, and Claude Code will design appropriate demographic filters, craft the 7-question discovery framework tailored to your domain, create the study in Ditto via API, and monitor for response completion.
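The "monitor for response completion" step is essentially a polling loop. The sketch below does not call Ditto: `get_status` is a hypothetical callable standing in for whatever status request your integration makes, and it is assumed to return counts of received and expected responses. Passing in `sleep` and `clock` keeps the loop testable without real waiting.

```python
import time
from typing import Callable


def wait_for_completion(
    get_status: Callable[[], dict],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> dict:
    """Poll until every expected response has arrived, or raise TimeoutError."""
    deadline = clock() + timeout_seconds
    while True:
        status = get_status()  # hypothetical shape: {"received": int, "expected": int}
        if status["received"] >= status["expected"]:
            return status
        if clock() >= deadline:
            raise TimeoutError(
                f"only {status['received']}/{status['expected']} responses received")
        sleep(poll_seconds)
```

A fixed interval is the simplest choice; if your status endpoint has rate limits, an increasing back-off would be a reasonable alternative.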

Real-Time Analysis

As personas respond, Claude Code can extract emerging themes before the study completes, flag particularly insightful quotes for later use, identify contradictions that suggest segment boundaries, and track quantified impacts (time, money, frequency).

Structured Output Generation

Once the study completes, Claude Code synthesises everything into a formatted pain taxonomy with supporting quotes, quantified impact summaries, a competitive landscape based on Q4-Q5 responses, an adoption barrier checklist from Q7, and recommended next steps for product development.

Claude Code handles the API calls, monitors progress, and delivers structured insights ready for product decisions.

Case Study: CareQuarter's Discovery Journey

CareQuarter had already validated their problem: adult children managing ageing parents experienced real pain, and the hypothesis was confirmed. But what did that pain actually look like? Their Phase 1 discovery research went deeper.

The Pain Taxonomy That Emerged

When 12 synthetic personas described their experience as family caregivers, five distinct pain categories emerged:

1. Portal Fragmentation

Every provider uses a different system. The pharmacy has one portal. The primary care doctor has another. The specialist has a third. The insurance company has a fourth. There's no integration. The family caregiver becomes what one participant called "the human API."

"I'm the one copying information between systems. I'm the one who remembers what the cardiologist said and tells the primary care doctor."

2. Prior Authorisation Ping-Pong

Insurance requires prior authorisation. The provider submits it. Insurance says they never received it. Provider says they sent it. Meanwhile, the treatment is delayed, and the caregiver is the only one following up.

"I've spent entire afternoons on hold, being transferred between the insurance company and the doctor's office, each blaming the other."

3. HIPAA Purgatory

The legal authorisation exists. It was signed, notarised, and submitted. But it's not visible in the provider's system. The caregiver shows up to an appointment with their parent and is treated as a stranger.

"I have power of attorney. I have the HIPAA form. And yet every new nurse acts like I'm some random person off the street."

4. Friday 4pm Fires

Hospital discharge decisions happen at the worst possible moments. Friday afternoon. Before a holiday weekend. When nothing can be arranged.

"They called at 4:30 on a Friday to say my mother was being discharged Monday morning. That's 60 hours to find home care, arrange transport, and stock her apartment with supplies."

5. The 2am Worry Spiral

Even when nothing is actively going wrong, caregivers carry persistent background anxiety about what they might be missing.

"I wake up at 2am wondering if I forgot to refill a prescription, or if that symptom she mentioned was actually serious."

What This Changed

This pain taxonomy shaped CareQuarter's entire product design:

Portal fragmentation led to a unified care record maintained by the coordinator

Prior auth ping-pong led to a dedicated insurance liaison function

HIPAA purgatory led to proactive authorisation management

Friday 4pm fires led to the Crisis Response tier (highest-demand offering)

2am worry spiral led to weekly status reports that give caregivers peace of mind

Without discovery research, CareQuarter might have built a generic "care coordination platform." With it, they built something that addressed specific, named pain points.


Case Study: The Auto Parts Discovery

An auto parts sourcing startup ran discovery research with 10 synthetic personas representing auto service professionals. They wanted to understand the parts sourcing problem deeply before building anything.

What the Research Revealed

Frequency was higher than expected:

One maintenance technician put it simply: "Every day. Almost every job needs a part." This wasn't occasional inconvenience. Parts sourcing was a daily, central activity that directly affected shop productivity.

The cost was quantifiable:

A former auto parts salesman provided a precise estimate: "$400-$800 per week in lost profit" from sourcing delays and errors. This gave the startup concrete ROI figures for their pitch.

The real pain wasn't speed:

The founders had assumed slow parts search was the core problem. The research revealed something different: the number one frustration was "ghost inventory," parts listed as available that weren't actually in stock.

"I'll find a part, it shows available, I place the order, then get a call an hour later saying they don't actually have it. Now I've wasted time and the customer is waiting."

ETAs mattered more than anything:

When asked the magic wand question, the consensus wasn't "faster search." It was: "Real, guaranteed ETAs with tracking at quote time, VIN-locked."

The startup learned that their product wasn't about speed. It was about trust: the ability to quote a customer with confidence that the part would arrive when promised.

Why Previous Tools Failed

Discovery research also revealed why participants had abandoned other solutions:

Fake inventory: Parts listed as available that weren't

VIN split errors: Wrong part recommended for the vehicle

Clunky UX: Apps that crashed or required too many steps

No integration: Didn't connect to shop management systems

These abandonment reasons became the startup's competitive checklist. Every feature had to address one of these specific failure modes.

Case Study: Elder Care Communication Discovery

A startup exploring elder care communication tools ran discovery research with 20 healthcare workers in nursing and care facilities.

The Fundamental Insight

The current system in most facilities is a binary call button. The resident presses it. A light goes on. Someone eventually responds. But the responder has no idea what the resident needs.

This leads to a cascade of problems:

Cognitive decline makes articulation impossible: Many residents can't explain what they need when staff arrive. They pressed the button because something was wrong, but they can't describe what.

Staff waste time on triage: Every call button response becomes a diagnostic exercise. Is it pain? Bathroom? Thirst? Loneliness? Staff have no way to prioritise.

Family communication is a time sink: Families call constantly asking for updates. Staff repeat the same information over and over.

The Magic Wand Response

When asked what would transform their work, healthcare staff had a clear answer: "Context before arrival."

"I want to know what I'm walking into. Bathroom, pain, water, lonely. Just the basics. So I can bring the right thing, bring the right attitude, and not waste time figuring it out."

This insight completely reframed the product concept. The startup wasn't building a "better call button." They were building a context layer.

What Makes Discovery Different from Validation

Validation asks: "Does this problem exist?"

Discovery asks: "What is this problem really like?"

The distinction matters because products built on validation alone tend to be generic. They solve a category of problem rather than a specific experience of it.

Consider the difference:

Validation-based insight: "Family caregivers are stressed."

Discovery-based insight: "Family caregivers experience five distinct types of stress: portal fragmentation, prior auth ping-pong, HIPAA purgatory, Friday 4pm fires, and the 2am worry spiral. The Friday 4pm fires trigger the strongest emotional response and the highest willingness to pay for help."

The first insight could lead to a meditation app. The second leads to a crisis response service with dedicated hospital discharge support.

The Patterns That Emerge

Across multiple discovery studies, certain patterns appear consistently:

1. Quantified Pain Is More Actionable

When participants provide specific numbers, product decisions become easier. "$400-800/week in lost profit" tells you how much value you can capture. "10-20 hours per week on sourcing" tells you how much time you can save.

Push for specifics. "A lot of time" is less useful than "about 15 hours a week."
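Specific answers have a practical advantage: they can be parsed and compared, while vague ones cannot. The sketch below extracts dollar and hour ranges from answers phrased like the examples above; the regular expressions are assumptions that cover formats such as "$400-$800", "$400-800" and "10-20 hours", not every way people state numbers. `annualise` simply multiplies a weekly range by 52.

```python
import re
from typing import Optional, Tuple

# Matches "$400-$800", "$400-800", "$400 to $800".
MONEY_RANGE = re.compile(r"\$(\d[\d,]*)\s*(?:-|to)\s*\$?(\d[\d,]*)")
# Matches "10-20 hours", "15 hours", "2 to 3 hours".
HOUR_RANGE = re.compile(r"(\d+(?:\.\d+)?)(?:\s*(?:-|to)\s*(\d+(?:\.\d+)?))?\s*hours?",
                        re.IGNORECASE)


def money_range(answer: str) -> Optional[Tuple[float, float]]:
    m = MONEY_RANGE.search(answer)
    if not m:
        return None
    return (float(m.group(1).replace(",", "")), float(m.group(2).replace(",", "")))


def hour_range(answer: str) -> Optional[Tuple[float, float]]:
    m = HOUR_RANGE.search(answer)
    if not m:
        return None  # e.g. "a lot of time": nothing to quantify
    low = float(m.group(1))
    return (low, float(m.group(2)) if m.group(2) else low)


def annualise(weekly: Tuple[float, float], weeks: int = 52) -> Tuple[float, float]:
    return (weekly[0] * weeks, weekly[1] * weeks)


print(money_range("$400-$800 per week in lost profit"))
print(hour_range("about 15 hours a week"))
print(hour_range("a lot of time"))
```

A `None` result is itself a useful signal: it marks an answer worth probing with a follow-up question.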

2. Stories Reveal What Surveys Hide

Rating scales flatten experience. Stories reveal texture. A survey might tell you that users rate "difficulty" at 4.2/5. A story tells you the specific sequence of events, emotional moments, and friction points.

3. Past Failures Predict Future Requirements

When you understand why people abandoned previous solutions, you know what your solution must avoid. Every past failure is a product requirement.

4. The Magic Wand Reveals Priorities

When you ask people to fix "one thing," they tell you what matters most. This is often different from what they complained about longest. Complaints tell you what's annoying. Magic wand answers tell you what's essential.

Interpreting Ditto's Discovery Outputs

When you run discovery research in Ditto, the platform generates structured insights. Here's how to read them effectively.

Strong Discovery Signals

These indicate you've found something product-worthy:

Consensus pain: 8+ of 10 personas mention the same frustration

Quantified impact: Specific dollar or time figures appear consistently

Emotional language: Words like "hate," "nightmare," "drives me crazy"

Detailed stories: Personas provide step-by-step accounts of their struggles

Clear magic wand: Most personas converge on a similar priority

Weak Discovery Signals

These suggest you need to refine your targeting or questions:

Vague complaints: "It's kind of annoying sometimes"

No quantification: Personas can't estimate time or money impact

Contradictory priorities: Every persona wants something different

Solution-jumping: Personas describe solutions, not problems

Low emotional charge: Responses feel detached or theoretical
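Three of these checks can be combined into a rough scorecard once you have counted responses. The 8-of-10 consensus bar comes from the list above and is generalised here to 80% of any panel size; the other two thresholds (at least half of personas giving numbers, and a magic wand answer shared by a strict majority) are assumptions chosen to approximate "consistently" and "most." Emotional language and story detail still have to be judged by reading.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudySignals:
    personas: int
    mention_same_pain: int   # personas naming the most common frustration
    gave_numbers: int        # personas giving a concrete time or money figure
    top_wish_votes: int      # personas sharing the most common magic wand answer


def assess(s: StudySignals) -> str:
    """Return 'strong', 'weak', or 'mixed'; integer arithmetic avoids float rounding."""
    consensus = 5 * s.mention_same_pain >= 4 * s.personas  # at least 80% (8+ of 10)
    quantified = 2 * s.gave_numbers >= s.personas          # assumed: at least half
    converged = 2 * s.top_wish_votes > s.personas          # assumed: strict majority
    met = consensus + quantified + converged
    if met == 3:
        return "strong"
    if met == 0:
        return "weak"
    return "mixed"


print(assess(StudySignals(personas=10, mention_same_pain=9, gave_numbers=7, top_wish_votes=6)))
```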

What to Do with Mixed Signals

Mixed signals often indicate segment boundaries. If half your personas prioritise speed and half prioritise accuracy, you may have discovered two distinct user types.

Return to Ditto and run targeted studies on each segment. "Auto shop owners who prioritise speed" and "Auto shop owners who prioritise accuracy" may reveal completely different pain taxonomies.
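A simple way to spot a possible segment boundary is to look for more than one priority held by a substantial share of the panel. In this sketch the 30% threshold is an assumption, and the persona-to-priority mapping is a hypothetical input you would build from coded Q6 answers.

```python
from collections import Counter


def candidate_segments(priorities: dict[str, str], min_share: float = 0.3) -> list[str]:
    """Return priorities held by at least `min_share` of personas.

    Two or more results suggest a segment boundary worth a targeted follow-up study.
    """
    if not priorities:
        return []
    counts = Counter(priorities.values())
    total = len(priorities)
    return sorted(p for p, c in counts.items() if c / total >= min_share)


split = {f"shop_{i}": ("speed" if i < 5 else "accuracy") for i in range(10)}
print(candidate_segments(split))
```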

Common Discovery Mistakes

Mistake 1: Asking Leading Questions

"Don't you find it frustrating when [specific problem]?" leads the witness. Ask open-ended questions instead.

Mistake 2: Stopping at Surface Answers

When someone says "the process is frustrating," probe deeper. "Can you tell me about a specific time?"

Mistake 3: Ignoring Contradictions

When participants contradict each other, there's usually a segment difference or context factor you haven't identified.

Mistake 4: Treating All Feedback Equally

Pain mentioned by every participant with high emotional charge is more important than pain mentioned once in passing.
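One way to act on this is to rank pain points by breadth first (how many distinct personas raised them) and intensity second. This ordering is an illustrative choice, not a prescribed formula; the input format, pain name to (persona id, intensity score) pairs, is hypothetical, and the intensity score can be anything consistent, such as a count of emotional markers.

```python
from statistics import mean


def rank_pains(mentions: dict[str, list[tuple[str, int]]]) -> list[str]:
    """Order pain points by distinct personas mentioning them, then by mean intensity."""
    def key(name: str) -> tuple[int, float]:
        pairs = mentions[name]
        personas = {persona for persona, _ in pairs}
        intensity = mean([score for _, score in pairs]) if pairs else 0.0
        return (len(personas), intensity)

    return sorted(mentions, key=key, reverse=True)
```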

Mistake 5: Skipping the Adoption Question

Always ask Q7: "What would make you hesitant to try a new solution?" This surfaces trust requirements and dealbreakers.

What Good Output Looks Like

At the end of discovery research, you should have:

A pain taxonomy: Named categories of pain with supporting quotes

Quantified impact: Specific numbers for time cost, money cost, frequency, and severity

Competitive landscape: What people currently use, what works, and why previous solutions failed

Magic wand priorities: The single thing participants would fix if they could

Adoption barriers: What would prevent people from switching

Representative quotes: Verbatim language that captures emotional reality
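If you assemble the final report programmatically, a short completeness check against this list can catch a missing deliverable before the report goes to the product team. The section keys below are hypothetical names mirroring the list above.

```python
# Hypothetical report keys, one per deliverable in the list above.
REQUIRED_SECTIONS = (
    "pain_taxonomy",
    "quantified_impact",
    "competitive_landscape",
    "magic_wand_priorities",
    "adoption_barriers",
    "representative_quotes",
)


def missing_sections(report: dict) -> list[str]:
    """List required sections that are absent or empty in a discovery report."""
    return [name for name in REQUIRED_SECTIONS if not report.get(name)]
```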

How This Feeds Into the Next Stage

Discovery research produces rich, qualitative understanding. The next stage, User Segmentation, takes this understanding and asks: "Are there distinct groups of users who experience this problem differently?"

You might discover that some auto shops prioritise speed while others prioritise accuracy. Some caregivers handle routine coordination while others face crisis situations. These segment differences inform product tiering, marketing messaging, and go-to-market strategy.

Discovery gives you depth. Segmentation gives you structure.

Explore the Research

The study referenced in this article is publicly available:

CareQuarter Phase 1: Pain Discovery - 12 personas on elder care coordination pain points

Ready to run discovery research on your problem space? Learn more at askditto.io