Something peculiar happened in software engineering over the past two years. Developers got superpowers. The rest of us watched.

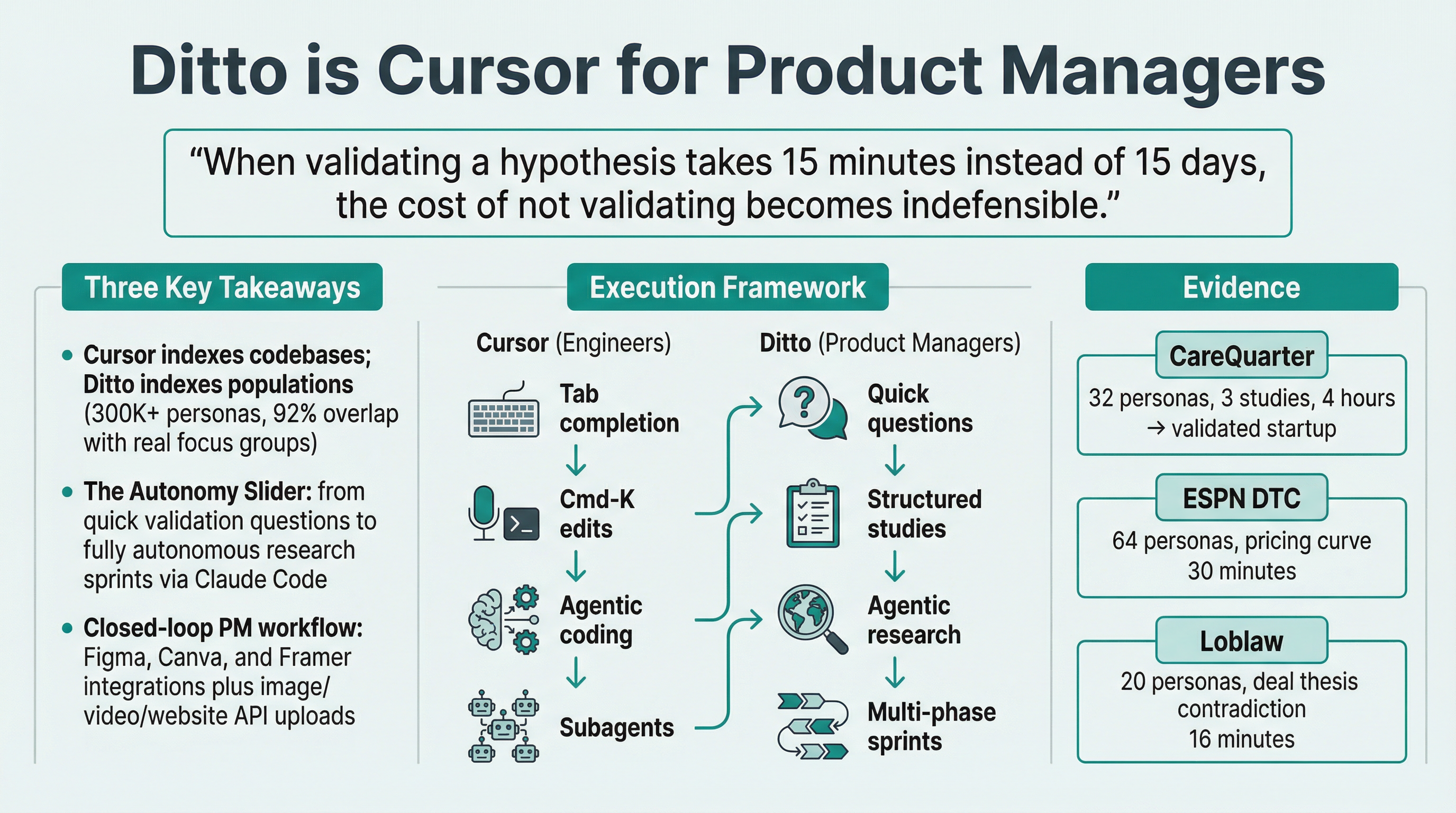

Cursor, the AI-first code editor now adopted by roughly half the Fortune 500, fundamentally changed what it means to write software. Not by being a better autocomplete. By understanding codebases the way a senior engineer does: holistically, contextually, with the ability to make changes across dozens of files simultaneously while holding the entire architecture in mind.

The result was predictable. Engineering velocity went through the roof. Pull request review times increased 91%. Teams merged 1.7x more issues. Code shipped faster, with fewer bugs, by smaller teams.

And then something awkward happened. Everyone looked at the product manager.

The PM Bottleneck

Here's the uncomfortable reality. AI-augmented engineering teams can now build features faster than product managers can validate whether those features should exist. The development cycle compressed. The research cycle didn't.

If you're a PM at a B2C tech company in 2026, your typical week still involves some combination of: guessing what customers want based on a handful of interviews you ran three months ago, arguing about pricing in a conference room where the loudest voice wins, testing positioning by looking at what your competitors do and vaguely approximating it, and making feature prioritisation decisions based on "product instinct", which, if we're being honest, is often just recency bias dressed up in a Miro board.

Traditional user research costs £10,000–50,000 per study and takes 4–8 weeks. By the time you've recruited participants, scheduled sessions, conducted interviews, transcribed recordings, and synthesised findings, the engineering team has already built and shipped the thing you were trying to validate.

The toolchain is the problem. Engineers got Cursor. Product managers got... another Notion template.

What Cursor Actually Does

For non-engineers reading this, a brief explanation of why Cursor matters.

Before Cursor, writing software was a largely manual process augmented by increasingly capable but fundamentally passive tools. Your IDE could highlight syntax errors and suggest autocomplete. GitHub Copilot could finish the line you'd started typing. Useful, but incremental.

Cursor changed the paradigm. It's not a tool that helps you code. It's a tool that codes with you. The critical innovations:

Codebase understanding. Cursor indexes your entire project (every file, every function, every dependency) and holds that context while working. It doesn't just see the line you're editing. It sees the architecture.

The autonomy slider. You choose how much independence to give the AI. Tab completion for small edits. Command-K for targeted changes. Or full agentic mode, where Cursor plans the approach, edits multiple files, runs tests, and iterates, all from a single prompt.

Subagents. Since version 2.0, Cursor runs specialised agents in parallel. One agent researches your codebase, another executes terminal commands, and a third handles a parallel refactoring task. The developer manages a small team of AI collaborators rather than doing everything sequentially.

Plan mode. Before writing code, Cursor designs the approach. It asks clarifying questions, identifies risks, and proposes a strategy. Then, and only then, it executes.

The net effect: a developer using Cursor operates at a fundamentally different level than one without it. Not because they type faster. Because the entire feedback loop (understand, design, implement, test) collapsed from days into minutes.

Now Replace "Code" with "Customer Research"

This is where it gets interesting.

Ditto does for product managers what Cursor does for engineers. Not by analogy. By structure.

Ditto is a synthetic research platform. It maintains over 300,000 AI-powered personas calibrated to census data across the US, UK, Canada, and Germany. These aren't chatbots with personality tags. They're demographically grounded synthetic humans, built on official population statistics, labour data, and consumption patterns, who respond to open-ended questions with the specificity and texture of real interview subjects.

EY validated the methodology. 92% statistical overlap with traditional focus groups.

But the technology isn't the interesting part. The interesting part is what it enables when you connect it to an AI orchestration layer, like Claude Code, and point it at the product manager's workflow.

Here's the structural parallel:

| Cursor (Engineers) | Ditto (Product Managers) |
|---|---|
| Indexes your entire codebase | Indexes an entire population (300K+ personas) |
| Understands context across files | Understands context across demographics, psychographics, and consumption patterns |
| Tab completion for quick edits | Quick-fire questions to synthetic panels for rapid validation |
| Agentic mode for autonomous coding | Agentic mode for autonomous research sprints |
| Plan mode before execution | Research design before fielding |
| Subagents for parallel tasks | Multi-phase studies running in parallel |
| CLI and terminal integration | API-first, Claude Code integration |
| Figma previews via embedded browser | Direct Figma, Canva, and Framer integration |

Every feature that makes Cursor transformative for engineering has a direct equivalent in Ditto for product management. The question is whether PMs will adopt it with the same enthusiasm developers adopted Cursor.

The Autonomy Slider for Research

Cursor's most important design insight is the autonomy slider. You control how much independence to give the AI. Sometimes you want a gentle autocomplete. Sometimes you want it to build an entire feature autonomously.

Ditto works the same way.

Low autonomy: quick validation. You have a hypothesis about pricing. You ask 10 synthetic personas a single question: "At what price would this feel like a bargain? A stretch? Too expensive?" You get answers in minutes. This is the Tab completion of customer research: fast, focused, low-risk.

Medium autonomy: structured study. You design a seven-question study with a curated research panel. Demographics filtered, occupation types specified, geographic distribution controlled. You ask about pain points, current solutions, switching triggers, deal breakers. The platform recruits personas, fields the study, and generates AI-synthesised insights. This is the Command-K of research: targeted, multi-step, still under your direction.

Full autonomy: agentic research sprint. You point Claude Code at the Ditto API and say "validate whether this product concept has legs." Claude Code designs the research methodology, creates the panel, runs the study, analyses the results, generates a report, and, if the findings are strong enough, drafts the landing page, pricing architecture, and pitch deck. This is the agentic mode of research. It's what we used to build CareQuarter.
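
For readers who think in data structures, the medium-autonomy case described above (a filtered panel plus a fixed question list) can be pictured roughly as follows. This is a minimal sketch, not Ditto's actual data model: the `Persona` and `StudySpec` classes, their field names, and the tiny persona pool are all hypothetical and exist only to illustrate the idea of filtering a population down to a panel.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Persona:
    """Hypothetical persona record; field names are illustrative only."""
    name: str
    country: str
    age: int
    occupation: str


@dataclass
class StudySpec:
    """A medium-autonomy study: a filtered panel plus a fixed question list."""
    country: str
    age_range: tuple[int, int]
    occupations: frozenset[str] = frozenset()  # empty set means "any occupation"
    panel_size: int = 10
    questions: list[str] = field(default_factory=list)

    def matches(self, p: Persona) -> bool:
        """True if the persona passes every demographic filter."""
        lo, hi = self.age_range
        return (
            p.country == self.country
            and lo <= p.age <= hi
            and (not self.occupations or p.occupation in self.occupations)
        )

    def recruit(self, pool: list[Persona]) -> list[Persona]:
        """Return up to panel_size personas from the pool that pass the filters."""
        return [p for p in pool if self.matches(p)][: self.panel_size]


# Made-up pool, purely for illustration.
pool = [
    Persona("A", "UK", 52, "teacher"),
    Persona("B", "UK", 38, "nurse"),
    Persona("C", "US", 50, "teacher"),
    Persona("D", "UK", 61, "nurse"),
    Persona("E", "UK", 47, "engineer"),
]

spec = StudySpec(
    country="UK",
    age_range=(45, 65),
    occupations=frozenset({"teacher", "nurse"}),
    panel_size=2,
    questions=["What is your biggest pain point?", "What would make you switch?"],
)
panel = spec.recruit(pool)
print([p.name for p in panel])
```

The low-autonomy case is the same structure with one question and loose filters; the full-autonomy case is the one where an agent, rather than the PM, fills in the spec.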

CareQuarter: The Full-Autonomy Test

I should explain CareQuarter, because it's the most honest demonstration of what happens when you give the system full autonomy.

In January 2026, I ran an experiment. I paired Claude Code with Ditto and asked them to found a startup together. Not to help me build one. Not to assist with some code. To actually go through the full process: identifying a market, researching customer needs, validating an idea, testing positioning and pricing, and producing investor-ready deliverables.

No human intervention beyond "go."

They chose elder care coordination. Specifically, a service for the sandwich generation: working adults aged 45-65 managing healthcare for ageing parents. And they chose it not because someone told them to, but because the research pointed there.

Phase 1: Pain Discovery. Claude Code designed a study with 12 synthetic personas. Seven open-ended questions about healthcare admin frustrations. The dominant finding wasn't "I need an app." It was: "I'm responsible without real authority in a system that's chopped into pieces." Every persona described being the unpaid case manager, the human API stitching together a healthcare system that refuses to talk to itself.

Phase 2: Deep Dive. Rather than jumping to a solution, Claude Code ran a second study with 10 additional personas. This one explored what "authority" actually means to these customers. The findings were surgical: start with HIPAA authorisation only (no power of attorney upfront); a named person, not a call centre; phone and paper first (no app); and clear spending caps and guardrails.

Phase 3: Concept Test. Four positioning options. Three pricing tiers. Purchase trigger analysis. The winning positioning: "Stop being the unpaid case manager." Pricing validated at $175/month for routine support, $325/month for complex needs, $125 per crisis event. Not "probably reasonable." Validated. Every persona confirmed these prices were within acceptable range.

The output: CareQuarter. Complete landing page, 14-slide pitch deck, full messaging guide, design specification. Four hours, 32 personas, 21 questions, zero human decisions.

I'm not suggesting AI should found startups. The interesting bit isn't the "AI founders" headline. It's that the entire research phase, the part that normally takes months and costs tens of thousands of pounds, happened in an afternoon, and the output was specific enough to build on.

That's Cursor-level augmentation. But for the PM workflow.

Show Me the Figma Plugin

One of the things that made Cursor indispensable rather than merely interesting was its integration depth. It didn't just write code. It understood your IDE, your terminal, your git workflow, your testing framework. It embedded itself in the developer's actual environment.

Ditto is doing the same thing for product managers.

Figma integration. Upload a mockup. Ditto shows it to synthetic personas and captures their reactions. Not "rate this design 1-5." Open-ended, qualitative feedback: "The pricing section feels buried." "I don't understand what this button does." "This looks like every other SaaS landing page I've ever seen." You get design critique from your target demographic without scheduling a single user test.

Canva integration. Marketing collateral, ads, social posts, email headers, tested against synthetic audiences before you spend a penny on media. Which headline stops the scroll? Which image makes people want to click? You stop guessing and start knowing.

Framer integration. Prototypes and landing pages tested in situ. Not after they're built and launched. Before.

Image, video, and website upload via the API. This is the piece that completes the picture. You can now pass visual assets (product images, packaging designs, ad creatives, competitor landing pages, pitch deck slides) directly into the Ditto API. Synthetic personas respond to the actual artefact, not a text description of it. This is the equivalent of Cursor's embedded browser: the tool seeing what you see, in context.

The practical implication: the product manager's workflow (research, design, test, iterate) becomes a closed loop. You don't need to leave your design tool to get customer feedback. You don't need to schedule a research session to validate an assumption. You don't need to wait for launch data to know whether your positioning resonates.

The Evidence Isn't Hypothetical

This isn't a roadmap deck. These are production results.

ESPN DTC pricing study. 64 synthetic personas tested ESPN's direct-to-consumer subscription across five price points. The willingness-to-pay curve showed a sharp demand cliff above $15-20, with top-two-box likelihood dropping from 65.7% at $9.99 to 6.3% at $29.99. Personas described one-in-one-out substitution patterns: they'd cancel another service to fund ESPN DTC, meaning incremental revenue was lower than the headline subscriber number would suggest. Completed in 30 minutes.

Loblaw / No Frills commercial diligence. 20 Canadian synthetic personas in 16 minutes. Key finding: sentiment deterioration stems from trust and consistency concerns, not pricing. Consumers use multiple grocery retailers as "portfolio managers" for different shopping missions. No Frills functions as a tactical value destination, not a primary grocer. This directly contradicted the market share narrative driving the deal thesis.

Michigan Secretary of State priorities. 10 personas, 40 responses, 24 minutes. Digital ID acceptance proved conditional on optional, backup-first positioning. Privacy credibility depends on enforced mechanisms rather than promises. Service reliability drives weekly trust more than election integrity announcements.

Each of these studies produced findings that would traditionally require weeks of fieldwork. Each one changed the strategic direction of the decision it was informing. Each one took less time than a single well-run user interview.
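
For anyone unfamiliar with the "top-two-box" figure quoted in the ESPN study: it is simply the share of respondents who pick one of the two highest points on a rating scale. The sketch below shows the arithmetic on a 5-point purchase-likelihood scale. The ratings are invented for illustration and are not data from the ESPN study; the function name and scale are assumptions too.

```python
from collections import defaultdict


def top_two_box(ratings, scale_max=5):
    """Share of ratings that fall in the top two points of the scale."""
    if not ratings:
        raise ValueError("need at least one rating")
    hits = sum(1 for r in ratings if r >= scale_max - 1)
    return hits / len(ratings)


# Invented responses: (price point, purchase likelihood on a 1-5 scale).
responses = [
    (9.99, 5), (9.99, 4), (9.99, 4), (9.99, 2),
    (19.99, 4), (19.99, 3), (19.99, 2), (19.99, 1),
    (29.99, 2), (29.99, 1), (29.99, 1), (29.99, 1),
]

# Group ratings by price point.
by_price = defaultdict(list)
for price, rating in responses:
    by_price[price].append(rating)

# One top-two-box share per price point gives a rough willingness-to-pay curve.
curve = {price: top_two_box(r) for price, r in sorted(by_price.items())}
for price, share in curve.items():
    print(f"${price:.2f}: {share:.1%}")
```

Plotting that share against price is what produces a demand curve, and a steep drop between two adjacent price points is the "cliff".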

The Claude Code Layer

There's a technical point worth making here, because it explains why the Cursor analogy isn't just marketing rhetoric.

Cursor's magic isn't the language model. It's the orchestration layer. Cursor takes a powerful but general-purpose AI and wraps it in the developer's specific workflow: codebase indexing, multi-file editing, terminal execution, test running, git integration. The model is the engine; Cursor is the car.

Claude Code plays the same role for Ditto. It's the orchestration layer that turns a research platform into a research agent.

When you run a Ditto study via Claude Code, this is what's actually happening under the hood:

1. Claude Code designs the research methodology: panel size, demographic filters, question sequence.
2. It creates the research group via the API, with precise demographic targeting (country, state, age range, occupation, education).
3. It formulates questions sequentially, each question informed by responses to previous ones.
4. It polls for completion, then asks the next question.
5. It triggers study completion for AI-generated analysis.
6. It synthesises findings into a narrative report.
7. It generates share links for stakeholders.
8. Optionally, it writes blog posts, emails, or presentations using the findings.
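
The steps above amount to a create, ask, poll, repeat, complete loop. Here is a rough sketch of that control flow. It deliberately does not use the real Ditto API: `ResearchClient`, its method names, and `FakeClient` are hypothetical stand-ins, and the fake runs in memory so the example works without a network or credentials.

```python
import time
from typing import Callable, Optional, Protocol


class ResearchClient(Protocol):
    """Hypothetical interface for illustration; not the real Ditto API."""
    def create_group(self, size: int, filters: dict) -> str: ...
    def ask(self, group_id: str, question: str) -> str: ...
    def is_done(self, job_id: str) -> bool: ...
    def answers(self, job_id: str) -> list: ...
    def complete(self, group_id: str) -> str: ...
    def share_link(self, group_id: str) -> str: ...


class FakeClient:
    """In-memory stand-in so the sketch runs end to end."""
    def __init__(self):
        self.size = 0
        self.jobs = {}
        self.polls = {}

    def create_group(self, size, filters):
        self.size = size
        return "group-1"

    def ask(self, group_id, question):
        job_id = f"job-{len(self.jobs) + 1}"
        self.jobs[job_id] = [f"persona {i}: reply to {question!r}" for i in range(self.size)]
        self.polls[job_id] = 0
        return job_id

    def is_done(self, job_id):
        self.polls[job_id] += 1
        return self.polls[job_id] >= 2  # pretend the first poll is still pending

    def answers(self, job_id):
        return self.jobs[job_id]

    def complete(self, group_id):
        return f"summary of {len(self.jobs)} questions"

    def share_link(self, group_id):
        return f"https://example.invalid/share/{group_id}"


def run_study(client: ResearchClient, size: int, filters: dict,
              next_question: Callable[[list], Optional[str]],
              poll_seconds: float = 0.0) -> dict:
    """Create a panel, ask questions one at a time, then close out the study."""
    group_id = client.create_group(size, filters)
    transcript = []
    # next_question sees the transcript so far, so follow-ups can build on answers.
    while (question := next_question(transcript)) is not None:
        job_id = client.ask(group_id, question)
        while not client.is_done(job_id):  # poll until responses are in
            time.sleep(poll_seconds)
        transcript.append((question, client.answers(job_id)))
    return {
        "transcript": transcript,
        "summary": client.complete(group_id),
        "link": client.share_link(group_id),
    }


def scripted(transcript):
    """Fixed script for the demo; an agent would read the transcript instead."""
    script = ["What frustrates you most?", "What have you tried?", "What would make you switch?"]
    return script[len(transcript)] if len(transcript) < len(script) else None


result = run_study(FakeClient(), size=3,
                   filters={"country": "UK", "age_range": (45, 65)},
                   next_question=scripted)
print(result["summary"], result["link"])
```

The design point is the `next_question` callback: because it receives every prior answer, the orchestrating agent can decide the next question, or decide to stop, based on what the panel has already said.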

A PM using Claude Code and Ditto together is doing something structurally identical to a developer using Cursor. They're operating inside an AI-augmented feedback loop where the traditional bottlenecks (recruiting, scheduling, transcribing, synthesising) simply don't exist.

What This Means (And What It Doesn't)

I should be clear about what I'm not claiming.

Synthetic research does not replace human customer contact. If you're running a beta programme, doing discovery interviews with key accounts, or watching real humans use your product in context, keep doing those things. They surface information that synthetic personas can't: the hesitation before clicking, the sidebar comment, the facial expression that tells you more than the answer.

What synthetic research replaces is the excuse for not doing research at all.

Most product decisions aren't made with the benefit of extensive customer research. They're made in meetings, based on a mixture of intuition, competitive analysis, anecdotal evidence, and whoever has the most conviction. Not because product managers don't value research. Because research has historically been too slow and expensive to inform the pace of product development.

Cursor made it unreasonable for developers to ship untested code. The tests are there, built into the workflow, running automatically. Skipping them is no longer a rational time-saving decision. It's just negligence.

Ditto does the same thing for product decisions. When validating a hypothesis takes 15 minutes instead of 15 days, the cost of not validating becomes indefensible.

The Uncomfortable Question

Here's the thing I keep coming back to.

Engineering went through this transition already. Two years ago, a developer without AI tools was competitive. Today, a developer without AI tools is at a structural disadvantage. Not because they're worse. Because their colleagues who use the tools operate at a fundamentally different velocity.

Product management is about to go through the same transition.

A product manager using Ditto and Claude Code can validate a hypothesis before the morning standup. They can test four positioning options over lunch. They can run a pricing study during the time it takes engineering to review a pull request. They can walk into a roadmap meeting with evidence rather than opinions.

A product manager without these tools is still guessing. And the gap between "informed by research" and "informed by instinct" is about to become as obvious, and as competitively consequential, as the gap between "writing code with AI" and "writing code by hand."

Cursor didn't just make developers faster. It changed the definition of what a developer is expected to do. Ditto is about to do the same thing for product managers.

The question isn't whether it's ready. The evidence is already there: 300,000 personas, 92% overlap with traditional focus groups, production-grade results across CPG, financial services, political campaigns, and startup validation.

The question is whether you'll be the PM who adopts it early. Or the one who's still scheduling focus groups when your competitor already has the answer.

Phillip Gales is co-founder of Ditto.